
Current state-of-the-art language learners require annotated corpora as training data, but constructing such corpora is difficult and time-consuming. Children, in contrast, acquire language through exposure to linguistic input in the context of a rich, relevant, perceptual environment. By connecting words and phrases to objects and events in the world, they ground the semantics of language in perceptual experience (Harnad, 1990). Ideally, a machine learning system could learn language in a similar manner. Our ultimate goal is to build a system that can exploit, with minimal supervision, the large amount of linguistic data naturally available in the world.


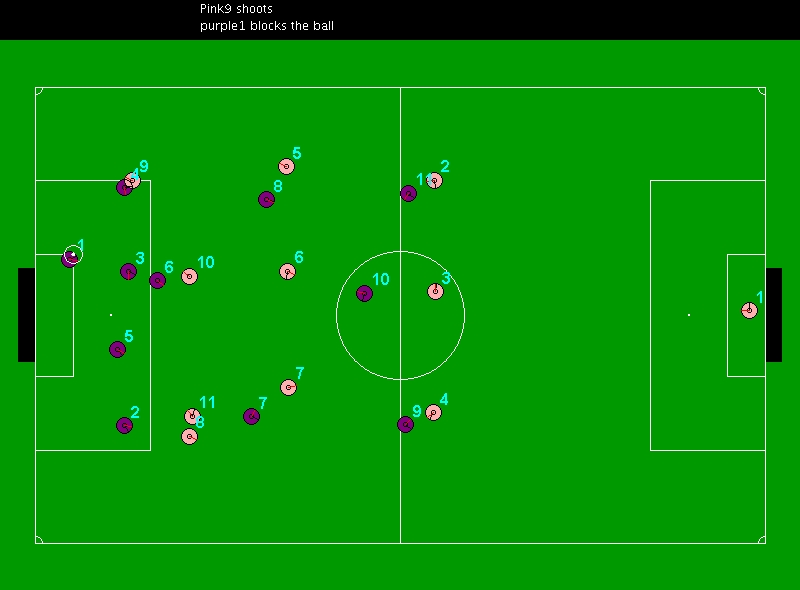

Although there has been some interesting computational work in grounded language learning (Roy, 2002; Bailey et al., 1997; Yu & Ballard, 2004), most of it has focused on dealing with raw perceptual data, and the language involved has been of very modest complexity. To help make progress, we study the problem in a simulated environment that retains many of the important properties of a dynamic world with multiple agents and actions while avoiding many of the complexities of robotics and vision. Specifically, we use the Robocup simulator, which provides a fairly detailed physical simulation of robot soccer. Our immediate goal is to build a system that learns to semantically interpret and generate language in the Robocup soccer domain by observing an ongoing commentary of the game paired with the dynamic simulator state. Several groups have constructed Robocup commentator systems (Andre et al., 2000) that provide a textual natural-language (NL) transcript of the simulated game, but their systems use manually developed templates and are incapable of learning.

Our demo can be accessed at this link
