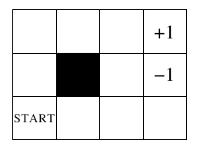

Due date: Monday March 3 2014

We use a Grid World introduced by Parr and Russell to evaluate the behavior of an agent in a stochastic, partially observable domain. Robotic domains are usually both stochastic (because actions may fail and lead to several different outcomes) and partially observable (because the robot perceives the world through its sensors and can only ever see a portion of it).

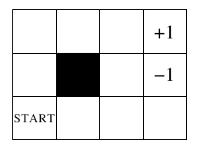

The world looks like this:

The agent starts in the bottom left corner and has to reach the

top right corner. We use a coordinate system (0,0) in the starting

state and (3,2) in the goal state.
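To make the coordinate convention concrete, here is a small Python sketch of the layout, with x growing to the east and y growing to the north. The figure is not reproduced in the text, so the obstacle position (1,1) is an assumption chosen to match the classic 4x3 world, and the helper names `is_free` and `render` are hypothetical.

```python
# Minimal sketch of the grid world layout (hypothetical helper names).
# ASSUMPTION: 4 columns (x = 0..3), 3 rows (y = 0..2), and a single interior
# obstacle at (1, 1), as in the classic 4x3 world; check against the figure.
WIDTH, HEIGHT = 4, 3
START = (0, 0)        # bottom-left corner
GOAL = (3, 2)         # top-right corner
TRAP = (3, 1)         # the other final state
OBSTACLES = {(1, 1)}  # assumed obstacle position


def is_free(cell):
    """True if the cell lies inside the grid and is not an obstacle."""
    x, y = cell
    return 0 <= x < WIDTH and 0 <= y < HEIGHT and cell not in OBSTACLES


def render():
    """ASCII picture of the grid, printing the top row (y = HEIGHT - 1) first."""
    symbols = {START: "S", GOAL: "G", TRAP: "T"}
    rows = []
    for y in reversed(range(HEIGHT)):
        row = ""
        for x in range(WIDTH):
            cell = (x, y)
            row += "#" if cell in OBSTACLES else symbols.get(cell, ".")
        rows.append(row)
    return "\n".join(rows)


print(render())
```

Printing the top row first keeps the picture in the same orientation as the figure, with the start S in the bottom-left and the goal G in the top-right.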

Actions: the actions available to the agent in the plan are ActMove_N, ActMove_E, ActMove_S, and ActMove_W.

Perceptions: the agent can perceive whether or not there is an obstacle in the squares immediately east and west of it. There are four possible combinations: no obstacle on the east and no obstacle on the west, an obstacle on the east but not on the west, no obstacle on the east but an obstacle on the west, and obstacles on both sides. These four conditions are represented, in the same order, by the following four atomic conditions, which can be used in the plan: LF_RF, LF_RO, LO_RF, LO_RO. Furthermore, the agent can perceive whether or not it is in a final state by testing the atomic condition FinalState. Both (3,2) and (3,1) are final states.
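The mapping from the agent's cell to these atoms can be sketched in Python as follows. This is not the code in gridworlds.zip; it assumes the obstacle is at (1,1), that the grid border counts as an obstacle, and that L/R stand for west/east (which is consistent with the order of the four combinations). The function names are hypothetical.

```python
# Sketch of how the perception atoms could be computed from the agent's cell.
# ASSUMPTIONS (not from the assignment code): the obstacle is at (1, 1),
# the grid border counts as an obstacle, and L/R stand for west/east.
WIDTH, HEIGHT = 4, 3
OBSTACLES = {(1, 1)}
FINAL_STATES = {(3, 2), (3, 1)}


def blocked(cell):
    """A cell is blocked if it is outside the grid or holds an obstacle."""
    x, y = cell
    outside = not (0 <= x < WIDTH and 0 <= y < HEIGHT)
    return outside or cell in OBSTACLES


def perception(pos):
    """Return one of LF_RF, LF_RO, LO_RF, LO_RO for the agent at pos."""
    x, y = pos
    west = "LO" if blocked((x - 1, y)) else "LF"
    east = "RO" if blocked((x + 1, y)) else "RF"
    return west + "_" + east


def final_state(pos):
    """The FinalState condition: true in both (3,2) and (3,1)."""
    return pos in FINAL_STATES


for cell in [(0, 0), (1, 0), (0, 1), (3, 2)]:
    print(cell, perception(cell), final_state(cell))
```

Under these assumptions the start cell (0,0) reads LO_RF, and (0,1) reads LO_RO because the obstacle sits directly to its east.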

Transitions: when the agent chooses an action and performs it, the environment transitions to the next state. We consider two versions of the transitions: one deterministic and one stochastic. With deterministic transitions, actions always succeed. Therefore, if the agent performs the action ActMove_N, it always moves one step north, unless there is an obstacle in that direction, in which case it stays put. With stochastic transitions, however, each action succeeds with probability 0.8 and moves the agent in each of the two orthogonal directions with probability 0.1. Therefore, if the agent performs the action ActMove_N, it moves one step north with probability 0.8, one step east with probability 0.1, and one step west with probability 0.1. After the direction has been determined, if there is an obstacle in that direction the agent stays put.
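The two transition models can be sketched in Python as below: the actual direction is chosen first, and only then is the target cell checked for an obstacle. The obstacle at (1,1), the treatment of the border as an obstacle, and all function names are assumptions for illustration, not the gridworlds.zip API.

```python
import random

# Sketch of the deterministic and stochastic transition models.
# ASSUMPTIONS: obstacle at (1, 1); the grid border blocks movement like an
# obstacle; function names are hypothetical, not the gridworlds.zip API.
WIDTH, HEIGHT = 4, 3
OBSTACLES = {(1, 1)}
MOVES = {
    "ActMove_N": (0, 1),
    "ActMove_E": (1, 0),
    "ActMove_S": (0, -1),
    "ActMove_W": (-1, 0),
}
# The two directions orthogonal to each action.
ORTHOGONAL = {
    "ActMove_N": ("ActMove_E", "ActMove_W"),
    "ActMove_S": ("ActMove_E", "ActMove_W"),
    "ActMove_E": ("ActMove_N", "ActMove_S"),
    "ActMove_W": ("ActMove_N", "ActMove_S"),
}


def move(pos, direction):
    """Move one step in direction, or stay put if the target is blocked."""
    dx, dy = MOVES[direction]
    nxt = (pos[0] + dx, pos[1] + dy)
    inside = 0 <= nxt[0] < WIDTH and 0 <= nxt[1] < HEIGHT
    return nxt if inside and nxt not in OBSTACLES else pos


def step_deterministic(pos, action):
    """Deterministic version: the agent always tries the intended direction."""
    return move(pos, action)


def outcome_distribution(pos, action):
    """Stochastic version: 0.8 intended direction, 0.1 each orthogonal one."""
    first, second = ORTHOGONAL[action]
    dist = {}
    for direction, p in ((action, 0.8), (first, 0.1), (second, 0.1)):
        nxt = move(pos, direction)
        dist[nxt] = dist.get(nxt, 0.0) + p  # merge outcomes landing on the same cell
    return dist


def step_stochastic(pos, action, rng=random):
    """Sample the actual direction first, then apply the obstacle check."""
    first, second = ORTHOGONAL[action]
    direction = rng.choices([action, first, second], weights=[0.8, 0.1, 0.1])[0]
    return move(pos, direction)


print(outcome_distribution((0, 0), "ActMove_N"))
# {(0, 1): 0.8, (1, 0): 0.1, (0, 0): 0.1}
```

From the start cell, the westward outcome of ActMove_N is blocked by the border, so that 0.1 of probability mass stays on (0,0).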

Cost: each action costs the agent 1/MAX_N, where MAX_N is the maximum number of actions allowed. In our implementation MAX_N = 30. Reaching the goal state (3,2) rewards the agent with +1, while reaching the state (3,1) penalizes the agent with -1. Note that (3,1) is also a terminal state, so once the agent ends up there, there is no way out. For instance, if an agent reaches the goal in 7 steps, its total reward is 1 (for the goal) - 7/30 = 0.7667. If the agent ends up in the trap (3,1) in 4 steps, its reward is -1 - 4/30 = -1.1333. The maximum possible reward is obtained by reaching the goal in 5 steps and is 1 - 5/30 = 0.8333. The worst possible reward is obtained by reaching the trap in 30 steps (the maximum number of steps) and is -1 - 30/30 = -2.
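The reward arithmetic above can be reproduced with a short Python sketch that uses exact fractions, so the 7-step case shows up as 23/30 before rounding. The function name is hypothetical, and the zero terminal reward for an episode that ends outside both final states is an assumption, since the text does not cover that case.

```python
from fractions import Fraction

# Sketch of the reward computation described above, using exact fractions.
# ASSUMPTION: an episode that ends outside (3,2) and (3,1) gets no terminal
# bonus or penalty, only the per-action cost; the text does not say.
MAX_N = 30
GOAL, TRAP = (3, 2), (3, 1)


def total_reward(steps, final_cell):
    """+1 for the goal, -1 for the trap, minus 1/MAX_N per action taken."""
    if not 0 <= steps <= MAX_N:
        raise ValueError("steps must be between 0 and MAX_N")
    terminal = {GOAL: 1, TRAP: -1}.get(final_cell, 0)
    return terminal - Fraction(steps, MAX_N)


for steps, cell in [(7, GOAL), (4, TRAP), (5, GOAL), (30, TRAP)]:
    r = total_reward(steps, cell)
    print(steps, cell, r, round(float(r), 4))
```

The four cases match the examples in the text: 23/30 (0.7667), -17/15 (-1.1333), 5/6 (0.8333), and -2.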

The software you need is:

pnp.zip - PNP Library. We covered in class how to compile it and how to set the environment variables PNP_INCLUDE and PNP_LIB. Generate the documentation with doxygen to see a quick reference guide on how to use PNP with your domain.

gridworlds.zip - an implementation of the domain described above.

helloworld.zip - an implementation of a simple hello world plan that you can use as an example of the classes you need to implement to connect PNP to a given domain.

ultrajarp.zip - the editor for plans.

The main file in gridworld has a couple of parameters you can set:

Write a plan for the deterministic version of the domain, that is, assuming that the actions always succeed.
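Plans themselves are written with the plan editor, so the following is not a plan in PNP's format. It is a Python sketch that checks a candidate open-loop action sequence against the deterministic transitions before you encode it as a plan. It assumes again that the only interior obstacle is at (1,1), and `run_plan` is a hypothetical helper.

```python
from fractions import Fraction

# Sketch: simulate an open-loop action sequence under deterministic transitions.
# ASSUMPTION: the only interior obstacle is at (1, 1); run_plan is hypothetical.
WIDTH, HEIGHT = 4, 3
OBSTACLES = {(1, 1)}
GOAL, TRAP = (3, 2), (3, 1)
MAX_N = 30
MOVES = {"ActMove_N": (0, 1), "ActMove_E": (1, 0),
         "ActMove_S": (0, -1), "ActMove_W": (-1, 0)}


def run_plan(actions, start=(0, 0)):
    """Execute actions until a final state is reached or MAX_N actions are used."""
    pos, steps = start, 0
    for action in actions[:MAX_N]:
        dx, dy = MOVES[action]
        nxt = (pos[0] + dx, pos[1] + dy)
        if 0 <= nxt[0] < WIDTH and 0 <= nxt[1] < HEIGHT and nxt not in OBSTACLES:
            pos = nxt  # otherwise the agent stays put
        steps += 1
        if pos in (GOAL, TRAP):
            break  # both final states are terminal
    return pos, steps


plan = ["ActMove_N", "ActMove_N", "ActMove_E", "ActMove_E", "ActMove_E"]
pos, steps = run_plan(plan)
print(pos, steps, 1 - Fraction(steps, MAX_N))  # (3, 2) 5 5/6
```

This sequence reaches the goal in 5 steps, the best case described above. Going east first (three ActMove_E, then ActMove_N) instead ends in the trap (3,1) after 4 steps.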

Create two ROS nodes: one that wraps PNP and one that runs Gridworld.