Geometry-Guided 3D Human Pose Estimation From a Single Image

Liyan Chen, Zhe Wang, Charless Fowlkes

Abstract

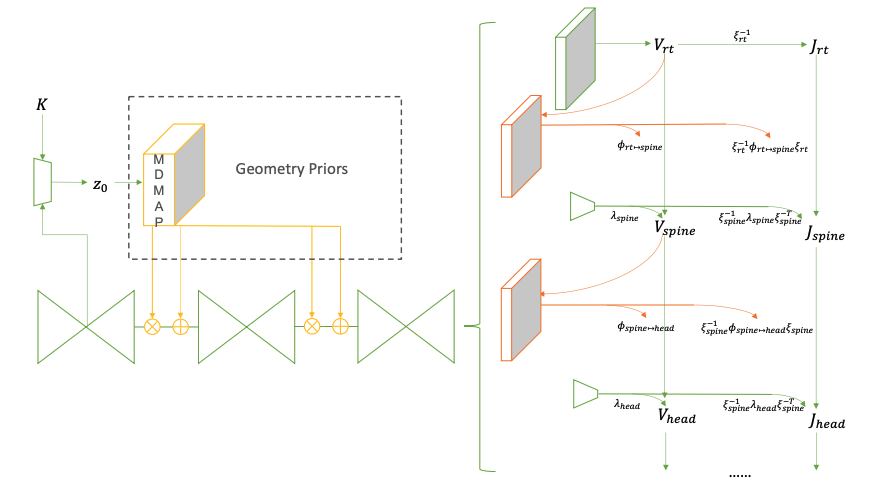

Estimating 3D human pose from a single image is an ill-posed problem, made more difficult by occlusion and appearance ambiguities. We explore techniques for injecting contextual knowledge about scene geometry, which can impose strong physical constraints on the set of allowable poses, to improve the quality of pose estimation. We exploit two aspects of scene geometry, global compatibility and static stability, and incorporate them with an internal representation of human poses derived from their geometric mechanics. First, we introduce a probabilistic model that expresses the compatibility of predicted human poses with a given scene geometry. Together with the geometric priors, this global compatibility serves as a repulsive loss during training. Second, we introduce the static stability of poses as a prior, derived from the supporting forces implied by the scene geometry, which serves as a contractive loss. The pose is represented as a joint Gaussian distribution composed of a root distribution and chains of $SE(3)$ transformations, so that the model learns inter-joint relations and can propagate geometric interactions from limb ends to the whole body. We show that these contributions improve the accuracy of the models on the GeometricPoseAffordance dataset.
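The idea of a pose built from a root distribution plus chains of $SE(3)$ transformations, forming one joint Gaussian, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. It assumes a single kinematic chain, fixed known rotations, and independent isotropic Gaussian noise on each bone, and the function names (`se3`, `rot_z`, `chain_means`, `chain_covariance`, `condition_on`) are hypothetical. It shows why a joint Gaussian lets a constraint at a limb end, here a foot required to touch a floor at z = 0, also move the root and the intermediate joint.

```python
import numpy as np

# Minimal sketch (hypothetical helpers, not the paper's code): one kinematic
# chain, fixed rotations, isotropic Gaussian noise on the root and each bone.


def se3(rotation, translation):
    """Pack a 3x3 rotation and a 3-vector into a 4x4 homogeneous SE(3) matrix."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def rot_z(theta):
    """Rotation by theta radians about the z-axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def chain_means(root_mean, links):
    """Mean joint positions along one chain, root first; shape (n_joints, 3).

    links: list of (rotation, bone_offset); each child frame is the parent
    frame composed with se3(rotation, bone_offset).
    """
    T = se3(np.eye(3), root_mean)
    joints = [T[:3, 3].copy()]
    for rotation, offset in links:
        T = T @ se3(rotation, offset)  # child frame expressed in world coordinates
        joints.append(T[:3, 3].copy())
    return np.stack(joints)


def chain_covariance(root_cov, bone_vars):
    """Covariance of all stacked joint positions, shape (3n, 3n).

    Each bone adds independent isotropic noise (variance bone_vars[k]), which
    rotation leaves unchanged. Two joints share the root noise plus the noise of
    every bone on their common path: for a single chain, the first min(i, j) bones.
    """
    n = len(bone_vars) + 1
    cov = np.zeros((3 * n, 3 * n))
    for i in range(n):
        for j in range(n):
            shared = root_cov + sum(bone_vars[:min(i, j)]) * np.eye(3)
            cov[3 * i:3 * i + 3, 3 * j:3 * j + 3] = shared
    return cov


def condition_on(mean, cov, index, value):
    """Condition the stacked Gaussian on the scalar component mean[index] == value."""
    s_xo = cov[:, index]
    gain = s_xo / cov[index, index]
    post_mean = mean + gain * (value - mean[index])
    post_cov = cov - np.outer(gain, s_xo)
    return post_mean, post_cov


# Usage: root -> knee -> foot, hanging straight down from a root at height 1.0.
root_mean = np.array([0.0, 0.0, 1.0])
down = np.array([0.0, 0.0, -0.45])
links = [(np.eye(3), down), (np.eye(3), down)]
mean = chain_means(root_mean, links).ravel()
cov = chain_covariance(0.01 * np.eye(3), [0.0025, 0.0025])

foot_z = 3 * 2 + 2  # index of the foot's z-coordinate in the stacked vector
post_mean, post_cov = condition_on(mean, cov, foot_z, 0.0)  # foot touches floor z = 0
print(post_mean.reshape(-1, 3)[:, 2])  # root, knee, foot heights after conditioning
```

The predicted foot sits 0.1 above the floor; conditioning on contact lowers the foot to the floor and, through the shared covariance terms, also lowers the knee and the root by smaller amounts. The paper's actual parameterization, and how it turns such constraints into repulsive and contractive losses, may differ from this simplification.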

Publications

Geometry-Guided 3D Human Pose Estimation From a Single Image

Liyan Chen, Zhe Wang, and Charless Fowlkes. To be submitted.
