Atari Video Game Learning: Notebook

2/5/2010

Wrote a very simple python program whose purpose was to create a feature determining if the agent should move left or right in any given linear gridworld situation. As input, the program takes positive examples of when the action Move Right lead to an immediate reward as well as examples when the action Move Left lead to an immediate reward. For example, the following are linear gridworld instances in which a Move Right action would lead to the agent A reaching the goal G and receiving a reward:

|___AG_|, |AG____|, |_____AG|, |_AG___|

|___GA_|, |GA____|, |_____GA|, |_GA___|

As soon as a satisfying feature has been found, it is then possible to apply this feature to a new instance of a linear gridworld to determine the optimal action to take. While this prospect is interesting, the manner in which features were computed is far from general enough to solve anything other than linear gridworlds.

2/8/2010

Finished meeting with Peter today who suggested I read several papers relating to the project. Here they are:

- Sherstov and Stone: Improving Action Selection in MDP's via Knowledge Transfer

- Konidaris and Barto: Building Portable Options: Skill Transfer in Reinforcement Learning

- Konidaris and Barto: Autonomous Shaping: Knowledge Transfer in Reinforcement Learning

4/13/2010

Discussions with Peter have shifted towards the problem of learning the effects of an agent's actions. Specifically, assume that you are given a gridworld problem where the raw state representation is an overhead view containing the raw pixels one would see if you were playing the gridworld as if it was a video game. In this representation the agent is some square block of pixels with side length at least 1. The problem was to create a model of the agents interactions with the environment such that the agent could predict the effects of its actions (namely by being able transform one pixel image to another given a certain type of action). To attack this problem I wrote a rudimentary feature induction engine which would attempt to generate subroutines which, when run on the input pixel image, would output another pixel image corresponding to what the original would look like after the action in question was taken. This program, ftIndEng.py, was able to create subroutines consisting of declarative statements, variable declarations, conditionals, and loops. While the program was certainly capable of generating subroutines which could solve the problem in a general sense, the problem was the odds that it generated such as working (and sufficiently general) subroutine were somewhere on the order of 1 in a billion. As python is not the fastest language out there, it was clear another approach would have to be formulated. Some associated readings:

- Littman, Sutton, Singh - Predictive Representations of State

- Pierce and Kuipers - Learning to explore and build maps

- Stronger and Stone - Maximum Likelihood Estimation of Sensor and Action Model Functions on a Mobile Robot

4/21/2010

The following are random, mostly unorganized musings on the problem of feature induction: as such they are not fully finished ideas and likely have errors. Read them at your own risk.

One type of problem is addressed by McCallum's CRF. Essentially we have an input set of features as well as labeled tuples. We wish to find a feature which is statistically correlated with the correct label. Essentially we seek a single feature which correlates exactly with the output label. This essential question is "what is common about all of the instances for which there is a given output label?"

Model learning formulation: we have some input sensor data as well as an action we wish to model. Given start state s2, action, and result state s2, we wish to be able to predict s2 given s1 and action. Alternatively we wish to be able to model the effect of the action: eg predict what effect on state it will have. We look for an invariant amounst all the actions necessary to transform s1 into s2.

This is essentially asking what is in common about these sequence of things.

I want to be able to recursively solve this common subproblem at different levels.

The problem of determining which actions lead to reinforcement is also one of identifying the common about the sequence of events leading up to any positive reinforcement cue.

Note that its arbitrarily hard to compute the invariant of some problem. There are absolutely no guarantees in the case of arbitrarily hard problems. Consider the case of noise, in which case there is nothing to be found, nothing to be learned. Over any decent period of time there will be no sustainable correlations. (Or perhaps this is a correlation?) Nah, I'm a constructivist, proving that we cant find a correlation isnt helpful in the problem of trying to find a correlation.

So an agent put interfaced with the real world has absolutely no gaurantees about discovering useful features, simply because state has existed long before the agent and predictions could depend on past state long before the agent existed. Practically however, the agent should be able to solve problems in practice.

1/6/2011

One of the fundamental challenges to a learning agent is causality. An agent perturbs the world and needs discover the consequences. It receives reward or punishment and must decipher why. These functions are likely arbitrarily complex, yet probably approximable. Each action an agent performs affects (or fails to affect) the true state of the world which may or may not become apparent to an agent through its sensation. The manner in which that effect manifests itself can be described as a function on the true world state. It is the job of the agent to approximate these functions as well as possible.

The agent derives feedback from the environment or internal mechanism. This feedback is a function computed at each step based on the true state of the world (or perhaps the agent's perception of the true world state). It is the job of the agent to interpret this feedback and understand the causes from which it arose. These feedback functions too may be arbitrarily complex, but likely approximable.

These are two fundamental challenges to an agent's learning. Good solutions to these problems are necessary but not sufficient for a complete learning agent.

6/14/2011

This project has more or less changed directions, but I'll keep the previous notebook posts here anyhow. We have specifically switched the goal of the project to learning on Atari games. That said, I'm let's jump straight in... One of the main issues we will have to deal with is the non-markovian nature of many Atari games. Yavar Naddaf acknowledges this problem in his thesis, citing an example of Space Invaders' agent being unable to tell whether a bullet is going up or down. He goes on to say that one possible solution is to include the last screenshot (frame) in the current state. This would work for disambiguating the direction of objects that move every timestep, but could run into problems with objects that move more slowly. It may be necessary to allow the agent to search through some finite amount of its history.

One idea that I'm considering is hand coding a predictive model of the environment. This would allow me to understand the difficulty of predicting next states based on current states. It would also give me a good idea of what types of things the agent would need to be able to learn.

6/22/2011

I've finished the handcoded predictive environmental model. I decided to go with the game Freeway since its about as easy as things get. Overall the handcoded model was tough to write mainly because the pixel state had been discretized into smaller chunks whose movement was somewhat irregular. At this point there are several directions I want to explore:

- Close the loop by hooking the model up to an agent with a planner (preferably UCT).

- Compare my UCT implementation to Yavar's and make sure everything checks out.

- Write code to display the screens predicted by the model. This would allow me to play the game using only the model.

7/13/2011

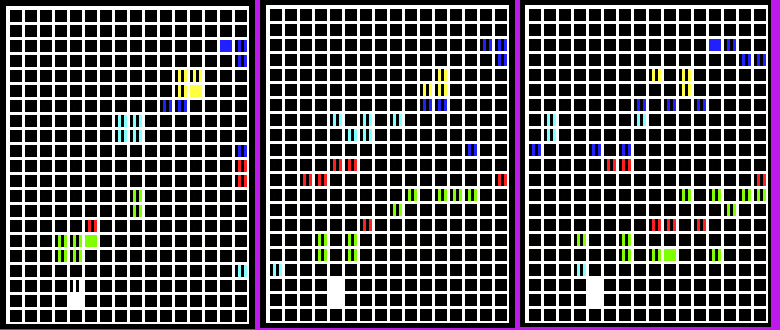

I have made much progress, but still there is far to go. The most major change was deciding to port my Python code into c++ to integrate better with the ALE package and to run faster. This has successfully been accomplished and the number of UCT steps I could run per iteration has increased from hundreds to tens of thousands. Currently, I'm at the crux of the project -- I need to come up with a good model to predict next states and rewards from the discretized pixel array. To start things off, I implemented a DBN (Dynamic Bayes Network) model, for which I manually provide the structure. This model has many interesting characteristics, but fails to deal adequately with objects such as cars which inhabit multiple contiguous blocks as shown below:

|

Because the model is trying to predict each pixel block independently of the surrounding block, we see the macro features of game screen become very strange. Cars break into many disparate blocks. While the model is statistically accurately predicting the output of each block, it has consideration of macroscopic features of the domain -- such as the fact that there is only one contiguous block of color on each row. Most of this is related to the problem of Synchronious arcs in DBNs.

9/9/2011

I have started using CRF to predict next pixel states. The following is the prediction accuracy for the game Freeway:

The following image shows the equivalent graph when the model is ungrounded in its predictions. That is to say that model is trying to imagine 1000 steps ahead. This is a harder task than the previous in which the model was asked to only make one state predictions. As we can see, CRF has perfect accuracy for certain amounts of training data, but less well for others. I will need to look into these performance variants in more detail.