Skim Chapter 28.2 of the Intel manual to familiarize yourself with low-level EPT programming. Several helpful definitions have been provided in vmm/ept.h.

Implement sys_ept_map in kern/syscall.c, as well as ept_lookup_gpa and ept_map_hva2gpa in vmm/ept.c. Once this is complete, you should have complete support for nested paging.
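
To make the shape of the page-table walk concrete, here is a minimal user-space sketch of a four-level lookup. It is not the lab's ept_lookup_gpa: every name prefixed with sk_ is hypothetical, the permission bits are defined locally rather than taken from vmm/ept.h, and ordinary pointers stand in for physical addresses (kernel code would have to translate between physical and kernel virtual addresses at each level).

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical constants for this sketch; the lab's own live in vmm/ept.h. */
#define SK_EPT_LEVELS   4
#define SK_PAGE_SHIFT   12
#define SK_IDX_BITS     9
#define SK_ENTRIES      (1u << SK_IDX_BITS)
#define SK_EPTE_READ    0x1ULL
#define SK_EPTE_WRITE   0x2ULL
#define SK_EPTE_EXEC    0x4ULL
#define SK_EPTE_PERM    (SK_EPTE_READ | SK_EPTE_WRITE | SK_EPTE_EXEC)
#define SK_EPTE_ADDR(e) ((e) & ~0xFFFULL)

/* Index into the table at `level` (0 = leaf table, 3 = top-level table). */
unsigned sk_ept_index(uint64_t gpa, int level)
{
    return (unsigned)((gpa >> (SK_PAGE_SHIFT + SK_IDX_BITS * level))
                      & (SK_ENTRIES - 1));
}

/* Allocate one zeroed, page-aligned table of 512 entries. */
uint64_t *sk_alloc_table(void)
{
    uint64_t *t = aligned_alloc(4096, 4096);
    if (t)
        memset(t, 0, 4096);
    return t;
}

/*
 * Walk from the root to the leaf entry that covers `gpa`.
 * If `create` is nonzero, missing intermediate tables are allocated.
 * Returns a pointer to the leaf entry, or NULL if the walk cannot finish.
 */
uint64_t *sk_ept_lookup(uint64_t *root, uint64_t gpa, int create)
{
    uint64_t *table = root;

    for (int level = SK_EPT_LEVELS - 1; level > 0; level--) {
        uint64_t *entry = &table[sk_ept_index(gpa, level)];

        if (!(*entry & SK_EPTE_PERM)) {      /* no next-level table yet */
            if (!create)
                return NULL;
            uint64_t *next = sk_alloc_table();
            if (!next)
                return NULL;
            /* In this simulation the pointer plays the role of a physical address. */
            *entry = (uint64_t)(uintptr_t)next | SK_EPTE_PERM;
        }
        table = (uint64_t *)(uintptr_t)SK_EPTE_ADDR(*entry);
    }
    return &table[sk_ept_index(gpa, 0)];
}
```

A mapping routine built on this would call the lookup with create set, then store the host page's address together with the requested permission bits in the returned leaf entry.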

At this point, you have enough host-level support functions to map the guest bootloader and kernel into the guest VM. You will need to read the kernel's ELF headers and copy the segments into the guest.

Implement copy_guest_kern_gpa() and map_in_guest() in user/vmm.c. For the bootloader, we use map_in_guest directly, since the bootloader is only 512 bytes, whereas the kernel's ELF header must be read by copy_guest_kern_gpa, which should then call map_in_guest for each segment.
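
The per-segment loop can be sketched as follows. This is an illustration only, assuming a 64-bit ELF image already held in memory; the sk_ names, the callback signature, and the statistics callback are all hypothetical and do not match the real map_in_guest interface, which works on a file descriptor.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SK_PT_LOAD 1u   /* program header type for loadable segments */

/* Minimal ELF64 file header and program header layouts. */
struct sk_elf64_hdr {
    uint8_t  e_ident[16];
    uint16_t e_type, e_machine;
    uint32_t e_version;
    uint64_t e_entry, e_phoff, e_shoff;
    uint32_t e_flags;
    uint16_t e_ehsize, e_phentsize, e_phnum;
    uint16_t e_shentsize, e_shnum, e_shstrndx;
};

struct sk_elf64_phdr {
    uint32_t p_type, p_flags;
    uint64_t p_offset, p_vaddr, p_paddr, p_filesz, p_memsz, p_align;
};

/* Hypothetical stand-in for "map this segment into the guest". */
typedef int (*sk_map_fn)(void *ctx, uint64_t gpa, uint64_t memsz,
                         uint64_t file_off, uint64_t filesz);

/* Call `map` once per PT_LOAD segment; return the count, or -1 on error. */
int sk_for_each_load_segment(const uint8_t *image, size_t len,
                             sk_map_fn map, void *ctx)
{
    struct sk_elf64_hdr eh;
    int mapped = 0;

    if (len < sizeof eh)
        return -1;
    memcpy(&eh, image, sizeof eh);           /* memcpy avoids alignment traps */
    if (memcmp(eh.e_ident, "\x7f" "ELF", 4) != 0)
        return -1;
    if (eh.e_phentsize != sizeof(struct sk_elf64_phdr))
        return -1;

    for (uint16_t i = 0; i < eh.e_phnum; i++) {
        struct sk_elf64_phdr ph;
        uint64_t off = eh.e_phoff + (uint64_t)i * sizeof ph;

        if (off > len || len - off < sizeof ph)
            return -1;                       /* header table runs off the image */
        memcpy(&ph, image + off, sizeof ph);
        if (ph.p_type != SK_PT_LOAD)
            continue;                        /* skip non-loadable segments */
        if (map(ctx, ph.p_paddr, ph.p_memsz, ph.p_offset, ph.p_filesz) < 0)
            return -1;
        mapped++;
    }
    return mapped;
}

/* Example callback: records what would have been mapped. */
struct sk_seg_stats { int count; uint64_t total_memsz; uint64_t last_gpa; };

int sk_record_segment(void *ctx, uint64_t gpa, uint64_t memsz,
                      uint64_t file_off, uint64_t filesz)
{
    struct sk_seg_stats *st = ctx;
    (void)file_off;
    if (filesz > memsz)
        return -1;                           /* malformed segment */
    st->count++;
    st->total_memsz += memsz;
    st->last_gpa = gpa;
    return 0;
}
```

Note that a segment's memory size can exceed its file size; the difference (typically .bss) must still be backed by zeroed guest pages.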

Once this is complete, the kernel will attempt to run the guest, and will panic because asm_vmrun is incomplete.

Implementing vmlaunch and vmresume.

In this exercise, you will need to write some assembly to launch the VM. Although much of the VMCS setup is completed for you, this exercise will require you to use the vmwrite instruction to set the host stack pointer, as well as the vmlaunch and vmresume instructions to start the VM.

In order to facilitate interaction between the guest and the JOS host kernel, we copy the guest register state into the environment's Trapframe structure. Thus, you will also write assembly to copy the relevant guest registers to and from this trapframe struct.

Skim Chapter 26 of the Intel manual to familiarize yourself with the vmlaunch and vmresume instructions. Complete the assembly code in asm_vmrun in vmm/vmx.c.
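
The choice between the two entry instructions depends on the launch state of the current VMCS: vmlaunch requires a VMCS whose launch state is clear, while vmresume requires one that has already been launched. The following small model of that rule may help when structuring asm_vmrun. It is only a sketch in C, not the assembly you need to write, and the sk_ names are hypothetical.

```c
#include <stdbool.h>

enum sk_entry_insn { SK_VMLAUNCH, SK_VMRESUME };

/* Hypothetical per-guest bookkeeping: has this VMCS been launched yet? */
struct sk_vcpu {
    bool launched;
};

/* Pick the instruction that is legal for the current launch state. */
enum sk_entry_insn sk_pick_entry(const struct sk_vcpu *v)
{
    return v->launched ? SK_VMRESUME : SK_VMLAUNCH;
}

/* Record a successful VM entry; later entries must use vmresume. */
void sk_entry_succeeded(struct sk_vcpu *v)
{
    v->launched = true;
}

/* Model of vmclear: the launch state returns to clear. */
void sk_vmclear(struct sk_vcpu *v)
{
    v->launched = false;
}
```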

Once this is complete, you should be able to run the VM until the guest attempts a vmcall instruction, which traps to the host kernel. Because the host isn't handling traps from the guest yet, the VM will be terminated.

Part 2: Handling VM exits

The equivalent event to a trap from an application to the operating system is called a VM exit. We have provided some skeleton code to dispatch the major types of exits we expect our guest to provide in the vmm/vmx.c function vmexit(). You will need to identify the reason for the exit from the VMCS, as well as implement handler functions for certain events in vmm/vmexits.c.

Similar to issuing a system call (e.g., using the int or syscall instruction), a guest can programmatically trap to the host using the vmcall instruction; such calls are sometimes called hypercalls. The current JOS guest uses three hypercalls: one to read the e820 map, which specifies the physical memory layout to the OS; and two to use host-level IPC, discussed below.

Multi-boot map (aka e820)

JOS is "told" the amount of physical memory it has by the bootloader. JOS's bootloader passes the kernel a multiboot info structure, which may contain the physical memory map of the system. The memory map may exclude regions of memory that are in use for reasons including I/O mappings for devices (e.g., the "memory hole"), space reserved for the BIOS, or physically damaged memory. For more details on how this structure looks and what it contains, refer to the specification. A typical physical memory map for a PC with 10 GB of memory looks like the following.

    e820 MEMORY MAP
    address: 0x0000000000000000, length: 0x000000000009f400, type: USABLE
    address: 0x000000000009f400, length: 0x0000000000000c00, type: RESERVED
    address: 0x00000000000f0000, length: 0x0000000000010000, type: RESERVED
    address: 0x0000000000100000, length: 0x00000000dfefd000, type: USABLE
    address: 0x00000000dfffd000, length: 0x0000000000003000, type: RESERVED
    address: 0x00000000fffc0000, length: 0x0000000000040000, type: RESERVED
    address: 0x0000000100000000, length: 0x00000001a0000000, type: USABLE

For the JOS guest, rather than emulate a BIOS, we will simply use a vmcall to request a "fake" memory map.
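
As an illustration of what such a fake map might contain, the sketch below builds three entries for a guest with a given amount of memory: low memory, the legacy I/O hole, and extended memory. The layout and the sk_ names are assumptions made for this example, not the lab's required format; the entry structure follows the multiboot memory-map entry layout (a size field followed by base, length, and type).

```c
#include <stddef.h>
#include <stdint.h>

#define SK_MB_USABLE     1u
#define SK_MB_RESERVED   2u
#define SK_IOHOLE_START  0xA0000ULL    /* 640 KiB */
#define SK_EXTMEM_START  0x100000ULL   /* 1 MiB */

/* One multiboot-style memory map entry; `size` excludes the size field itself. */
struct sk_mmap_entry {
    uint32_t size;
    uint64_t base;
    uint64_t length;
    uint32_t type;
} __attribute__((packed));

/* Fill `out` with a three-entry map; returns the number of entries written. */
size_t sk_build_fake_map(struct sk_mmap_entry out[3], uint64_t guest_mem)
{
    if (guest_mem <= SK_EXTMEM_START)
        return 0;                       /* too small for this layout */

    out[0] = (struct sk_mmap_entry){ 20, 0, SK_IOHOLE_START, SK_MB_USABLE };
    out[1] = (struct sk_mmap_entry){ 20, SK_IOHOLE_START,
                                     SK_EXTMEM_START - SK_IOHOLE_START,
                                     SK_MB_RESERVED };
    out[2] = (struct sk_mmap_entry){ 20, SK_EXTMEM_START,
                                     guest_mem - SK_EXTMEM_START,
                                     SK_MB_USABLE };
    return 3;
}

/* Sum the lengths of all usable regions, as a kernel would at boot. */
uint64_t sk_usable_bytes(const struct sk_mmap_entry *map, size_t n)
{
    uint64_t total = 0;
    for (size_t i = 0; i < n; i++)
        if (map[i].type == SK_MB_USABLE)
            total += map[i].length;
    return total;
}
```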

Complete the implementation of vmexit() by identifying the reason for the exit from the VMCS. You may need to search Chapter 27 of the Intel manual to solve this part of the exercise.
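
Once the exit reason field has been read from the VMCS, its low 16 bits hold the basic exit reason and bit 31 indicates a VM-entry failure. The sketch below decodes a raw value and picks a handler category. The sk_ names and the handler enum are hypothetical; the numeric reasons shown (10 for cpuid, 18 for vmcall, 48 for an EPT violation) are the architectural values, but check them against the definitions the lab provides.

```c
#include <stdbool.h>
#include <stdint.h>

#define SK_EXIT_REASON_CPUID          10u
#define SK_EXIT_REASON_VMCALL         18u
#define SK_EXIT_REASON_EPT_VIOLATION  48u

enum sk_handler { SK_H_CPUID, SK_H_VMCALL, SK_H_EPT, SK_H_UNHANDLED };

/* Bits 15:0 of the exit reason field are the basic exit reason. */
uint16_t sk_basic_exit_reason(uint32_t raw)
{
    return (uint16_t)(raw & 0xFFFFu);
}

/* Bit 31 is set when the exit was caused by a failed VM entry. */
bool sk_is_entry_failure(uint32_t raw)
{
    return (raw >> 31) != 0;
}

/* Map a raw exit reason to the handler category that should run. */
enum sk_handler sk_dispatch(uint32_t raw)
{
    if (sk_is_entry_failure(raw))
        return SK_H_UNHANDLED;

    switch (sk_basic_exit_reason(raw)) {
    case SK_EXIT_REASON_CPUID:         return SK_H_CPUID;
    case SK_EXIT_REASON_VMCALL:        return SK_H_VMCALL;
    case SK_EXIT_REASON_EPT_VIOLATION: return SK_H_EPT;
    default:                           return SK_H_UNHANDLED;
    }
}
```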

Implement the VMX_VMCALL_MBMAP case of the function handle_vmcall() in vmm/vmexits.c. Also, be sure to advance the instruction pointer so that the guest doesn't get in an infinite loop.
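
The reason the instruction pointer must be advanced is that a VM exit for vmcall leaves the guest's rip pointing at the vmcall itself, so resuming without adjusting it re-executes the same hypercall forever. The sketch below shows the pattern on a simulated register set. The hypercall number, the register convention (request in rax, result in rbx), and the sk_ names are assumptions for illustration; vmcall is a three-byte instruction, though real code may prefer to read the instruction length recorded in the VMCS.

```c
#include <stdbool.h>
#include <stdint.h>

#define SK_VMCALL_MBMAP     1u   /* hypothetical hypercall number */
#define SK_VMCALL_INSN_LEN  3u   /* vmcall encodes as 0F 01 C1 */

/* Simulated subset of the guest registers saved in the trapframe. */
struct sk_guest_regs {
    uint64_t rax;
    uint64_t rbx;
    uint64_t rip;
};

/*
 * Handle one hypercall. `mbinfo_gpa` is the guest-physical address at which
 * the host has placed the fake memory map (an assumption of this sketch).
 * Returns true if the call was handled and the guest may be resumed.
 */
bool sk_handle_vmcall(struct sk_guest_regs *r, uint64_t mbinfo_gpa)
{
    bool handled = false;

    switch (r->rax) {
    case SK_VMCALL_MBMAP:
        r->rbx = mbinfo_gpa;     /* tell the guest where to find the map */
        handled = true;
        break;
    default:
        break;                   /* unknown hypercall: leave state untouched */
    }

    if (handled)
        r->rip += SK_VMCALL_INSN_LEN;   /* step past the vmcall instruction */
    return handled;
}
```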

Once the guest gets a little further in boot, it will attempt to discover whether the CPU supports long mode, using the cpuid instruction. Our VMCS is configured to trap on this instruction so that we can emulate it and hide the presence of vmx, since we have not implemented emulation of vmx in software.

Once this is complete, implement handle_cpuid() in vmm/vmexits.c.
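
The filtering step can be sketched in isolation. On x86, cpuid leaf 1 reports VMX support in bit 5 of ecx, and leaf 0x80000001 reports long mode in bit 29 of edx. The function below takes the values the real instruction returned and clears only the VMX bit; the sk_ names are hypothetical, and a full handler would also have to execute cpuid on the host, write the results into the guest's registers, and advance the guest's instruction pointer.

```c
#include <stdint.h>

#define SK_CPUID_FEATURE_LEAF  1u
#define SK_CPUID_ECX_VMX       (1u << 5)   /* leaf 1, ecx bit 5 */

/* The four registers that cpuid fills in. */
struct sk_cpuid_regs {
    uint32_t eax, ebx, ecx, edx;
};

/* Hide VMX from the guest; every other leaf and bit passes through. */
void sk_filter_cpuid(uint32_t leaf, struct sk_cpuid_regs *r)
{
    if (leaf == SK_CPUID_FEATURE_LEAF)
        r->ecx &= ~SK_CPUID_ECX_VMX;
}
```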

When the host can emulate the cpuid instruction, your guest should run until it attempts to perform disk I/O.

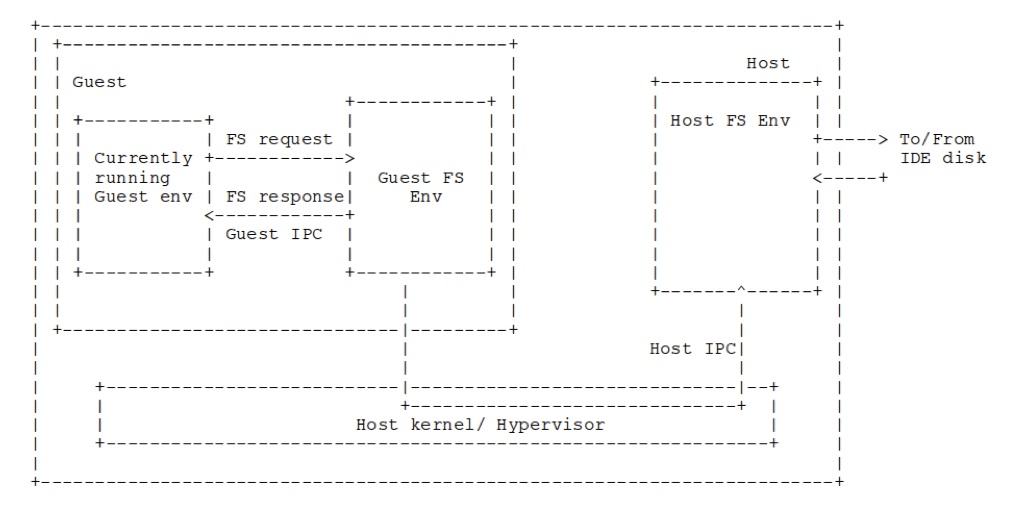

JOS has a user-level file system server daemon, as in a microkernel design. We place the guest's disk image as a file on the host file system server. When the guest file system daemon requests disk reads, rather than issuing ide-level commands, we will instead use vmcalls to ask the host file system daemon for regions of the disk image file. This is depicted in the image above.

Once this is completed, you need to modify bc_pgfault and flush_block in fs/bc.c to issue I/O requests using the host_read and host_write hypercalls. Use the macro VMM_GUEST to select different behavior for the guest and host OS. You will also have to implement the IPC send and receive hypercalls in handle_vmcall, as well as the client code to issue ipc_host_send and ipc_host_recv vmcalls in lib/ipc.c.
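
The address arithmetic behind a block-cache fault is the same for guest and host; only the backend that services the request differs. The sketch below turns a faulting address into a sector request and shows how a compile-time macro can select the backend. The constants (4096-byte blocks, 512-byte sectors, a disk map starting at 0x10000000) and all sk_ names are assumptions for this example, so check them against fs/fs.h before relying on them.

```c
#include <stdint.h>

#define SK_BLKSIZE   4096u
#define SK_SECTSIZE  512u
#define SK_BLKSECTS  (SK_BLKSIZE / SK_SECTSIZE)
#define SK_DISKMAP   0x10000000ULL   /* assumed base of the block cache */

enum sk_backend { SK_BACKEND_IDE, SK_BACKEND_HOST_VMCALL };

/* One whole-block I/O request derived from a page fault. */
struct sk_io_req {
    uint64_t block_va;   /* page-aligned address to fill */
    uint32_t secno;      /* first sector on the disk (or disk image) */
    uint32_t nsecs;      /* number of sectors to transfer */
};

/* Translate a faulting address into a block request; -1 if out of range. */
int sk_fault_to_request(uint64_t fault_va, uint64_t disk_blocks,
                        struct sk_io_req *out)
{
    if (fault_va < SK_DISKMAP)
        return -1;

    uint64_t blockno = (fault_va - SK_DISKMAP) / SK_BLKSIZE;
    if (blockno >= disk_blocks)
        return -1;

    out->block_va = SK_DISKMAP + blockno * SK_BLKSIZE;
    out->secno = (uint32_t)(blockno * SK_BLKSECTS);
    out->nsecs = SK_BLKSECTS;
    return 0;
}

/* Guest builds go through the host via vmcall; host builds talk to the disk. */
enum sk_backend sk_select_backend(void)
{
#ifdef VMM_GUEST
    return SK_BACKEND_HOST_VMCALL;
#else
    return SK_BACKEND_IDE;
#endif
}
```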

Finally, you will need to extend sys_ipc_try_send to detect whether the environment is of type ENV_TYPE_GUEST, and you also need to implement the ept_page_insert function.

Once these steps are complete, you should have a fully running JOS-on-JOS.

Grading

Your code will be tested for correct extended page table mapping, correct use of vmlaunch and vmresume, and correct handling of vmcalls on VM exit.

Add the following lines at the end of the file kern/syscall.c. They are required to test the EPT map functionality.

    #ifdef TEST_EPT_MAP
    int
    _export_sys_ept_map(envid_t srcenvid, void *srcva,
                        envid_t guest, void *guest_pa, int perm)
    {
        return sys_ept_map(srcenvid, srcva, guest, guest_pa, perm);
    }
    #endif

After adding this, you can run the grade scripts.

Grade Scripts can be found here.

Download both executables and run python gradeproject.pyc from the root directory of the project.