SCAND

[Paper] [Video] [Dataset]

About

Have you wondered how autonomous mobile robots should share space with humans in public spaces? Are you interested in developing autonomous mobile robots that can navigate within human crowds in a socially compliant manner? Do you want to analyze human reactions and behaviors in the presence of mobile robots of different morphologies?

If so, we introduce the Socially CompliAnt Navigation Dataset (SCAND). The dataset contains 25 miles and 8.7 hours of robot-driven trajectories through a variety of social environments around the University of Texas at Austin campus.

SCAND also contains:

- 138 trajectories

- 15 days of social navigation data on 2 robots: a wheeled Clearpath Jackal and a legged Boston Dynamics Spot

- Indoor and outdoor environments on the UT Austin campus

- 2 highly crowded football game days (including a concert at the same time!)

- Mildly to heavily crowded environments

- 4 different human demonstrators
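To give a feel for the scale of individual runs, the headline figures above can be turned into rough per-trajectory averages. This is only a back-of-the-envelope sketch: it assumes the rounded totals (25 miles, 8.7 hours, 138 trajectories) are exact, and the variable names are illustrative rather than taken from the dataset.

```python
# Rough averages derived from the headline numbers quoted above.
# The totals are rounded in the text, so treat the results as approximate.
MILES_TO_METERS = 1609.344

total_miles = 25.0
total_hours = 8.7
num_trajectories = 138

total_meters = total_miles * MILES_TO_METERS
total_seconds = total_hours * 3600.0

mean_speed_mps = total_meters / total_seconds              # average driving speed
mean_length_m = total_meters / num_trajectories            # average trajectory length
mean_duration_min = total_hours * 60.0 / num_trajectories  # average trajectory duration

print(f"mean speed:    {mean_speed_mps:.2f} m/s")
print(f"mean length:   {mean_length_m:.0f} m per trajectory")
print(f"mean duration: {mean_duration_min:.1f} min per trajectory")
```

Under these assumptions, the average works out to roughly 1.3 m/s, close to a typical walking pace, with each trajectory averaging a few hundred meters and a few minutes.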

Data Collection

In a busy outdoor/indoor environment, the human expert teleoperates the robot, moving among other pedestrians in a socially compliant manner:

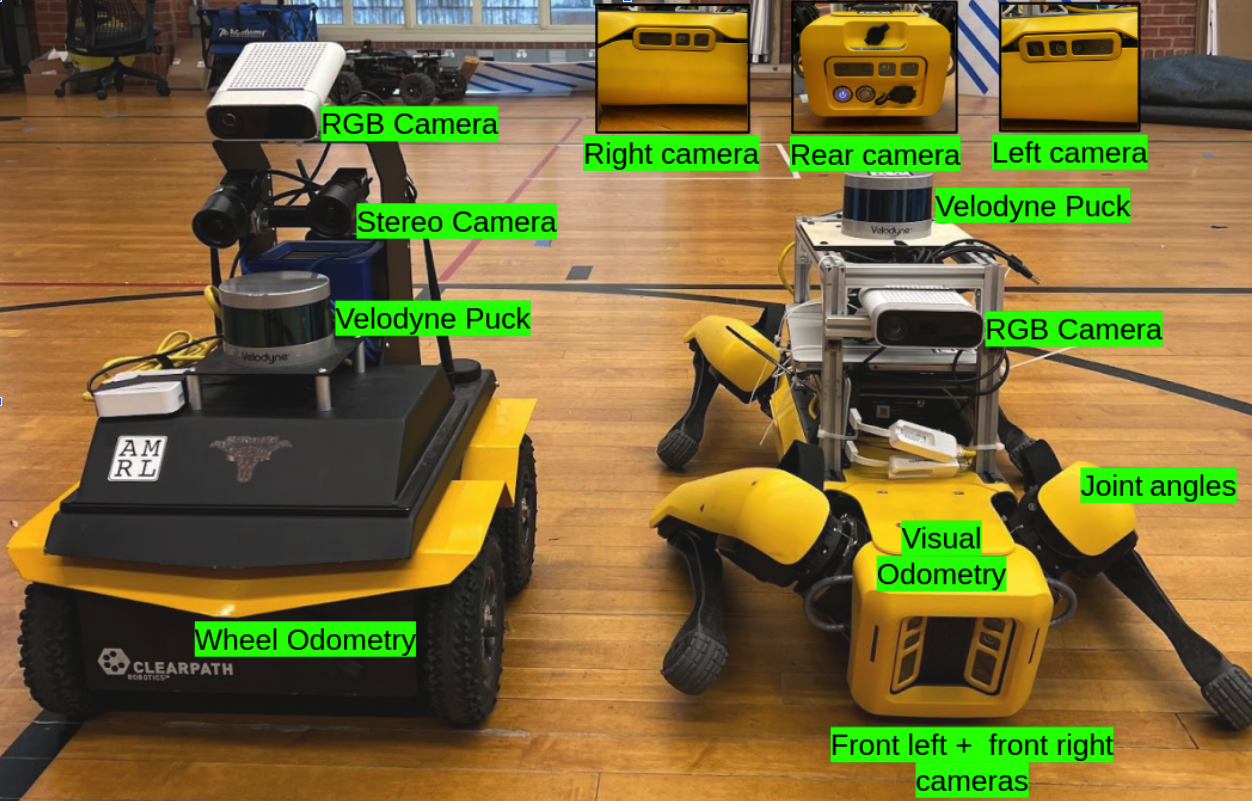

Data from each trajectory is collected by a variety of sensors on the Jackal and Spot:

Here is an example of an outdoor data collection run on Speedway, UT Austin:

The above trajectory shows the 5 monocular videos taken from around the body of Spot, the RGB video from the Azure Kinect Camera, and a 3D point cloud captured by the Velodyne Lidar Puck.

Example Usage

To show an example of how this dataset can be used, we train a behavior cloning (BC) agent to imitate the socially compliant navigation demonstrations in SCAND.
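For readers unfamiliar with behavior cloning, the idea is to treat the demonstrations as a supervised dataset of (observation, action) pairs and fit a policy that maps one to the other. The sketch below is a generic illustration, not the agent described here: the page does not specify the model, inputs, or loss, so the linear policy, the 4-dimensional observation, the 2-dimensional action (e.g. linear and angular velocity), and the synthetic "expert" data are all assumptions made for the example.

```python
import numpy as np

def fit_bc_policy(observations, actions, ridge=1e-3):
    """Fit a linear policy, action = [obs, 1] @ W, by ridge-regularized least squares."""
    obs = np.asarray(observations, dtype=float)
    act = np.asarray(actions, dtype=float)
    X = np.hstack([obs, np.ones((obs.shape[0], 1))])  # append a bias column
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ act)

def policy(W, observation):
    """Apply the fitted linear policy to a single observation vector."""
    o = np.append(np.asarray(observation, dtype=float), 1.0)
    return o @ W

# Synthetic stand-in for demonstrations (hypothetical, NOT SCAND data):
# 500 observations with 4 features, each labeled with a 2-D expert action.
rng = np.random.default_rng(0)
true_W = rng.normal(size=(5, 2))                     # hidden "expert" mapping
demo_obs = rng.normal(size=(500, 4))
demo_act = np.hstack([demo_obs, np.ones((500, 1))]) @ true_W
demo_act += 0.01 * rng.normal(size=demo_act.shape)   # small demonstration noise

W = fit_bc_policy(demo_obs, demo_act)

test_obs = rng.normal(size=4)
print("cloned action:", policy(W, test_obs))
print("expert action:", np.append(test_obs, 1.0) @ true_W)
```

In practice a BC agent for navigation would more likely use a neural network over sensor inputs, but the training signal is the same: minimize the gap between the policy's action and the demonstrator's action on the recorded observations.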

We then compare this learned agent with ROS move_base in real-world trials using human subjects in 2 scenarios:

- Static trial: the human is stationary and the robot navigates around the human.

- Dynamic trial: the human and the robot move past each other and reach where the other started.

Static Trials

In this trial, the human stands in the center of the room, and the robot has to navigate to a goal positioned behind the human.

The move_base agent does reach the goal but travels very close to the human participant and is not very socially compliant:

The BC agent trained from SCAND also gets to the goal point behind the human participant, but unlike move_base, it navigates around the participant with plenty of distance:
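The comparison above is qualitative. One simple way to put a number on "how close the robot passed" in a static trial is the minimum distance between the stationary human and the robot's path. The sketch below assumes a 2D piecewise-linear path; the function name, the coordinates, and the choice of minimum clearance as a proxy for social compliance are illustrative assumptions, not a metric defined by SCAND.

```python
import math

def min_clearance(robot_path, human_xy):
    """Smallest distance from a stationary human to a piecewise-linear robot path."""
    hx, hy = human_xy
    if len(robot_path) == 1:
        x, y = robot_path[0]
        return math.hypot(hx - x, hy - y)
    best = math.inf
    for (x0, y0), (x1, y1) in zip(robot_path, robot_path[1:]):
        dx, dy = x1 - x0, y1 - y0
        seg_len_sq = dx * dx + dy * dy
        if seg_len_sq == 0.0:
            t = 0.0  # degenerate segment: both endpoints coincide
        else:
            # Project the human onto the segment and clamp to its endpoints.
            t = ((hx - x0) * dx + (hy - y0) * dy) / seg_len_sq
            t = max(0.0, min(1.0, t))
        px, py = x0 + t * dx, y0 + t * dy
        best = min(best, math.hypot(hx - px, hy - py))
    return best

# Hypothetical paths (meters), with the human standing at the origin.
human = (0.0, 0.0)
direct_path = [(-3.0, 0.3), (3.0, 0.3)]              # brushes past the human
detour_path = [(-3.0, 0.3), (0.0, 1.5), (3.0, 0.3)]  # swings wide around the human

print("direct clearance:", min_clearance(direct_path, human))
print("detour clearance:", min_clearance(detour_path, human))
```

Both paths reach the same goal, but the detour keeps a much larger clearance, which is the kind of difference the two runs above illustrate.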

Dynamic Trials

In this trial, the human participant and the robot move past each other and reach where the other started walking from.

Once again, the move_base agent does reach the goal but travels very close to the human, creating an awkward scenario:

The BC agent trained from SCAND also gets to the goal point behind the human participant but once again navigates around the participant with plenty of distance:
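When both the human and the robot are moving, closeness has to be measured at matching instants rather than between paths as a whole. The sketch below resamples two timestamped 2D trajectories onto a common clock and reports the smallest separation and when it occurs. It is an illustration under assumptions: the function name, the sampling step, and the example trajectories are hypothetical, timestamps are assumed to be strictly increasing, and SCAND does not prescribe this metric.

```python
import numpy as np

def min_separation(t_robot, xy_robot, t_human, xy_human, dt=0.05):
    """Return (minimum distance, time of minimum) over the shared time window.

    Timestamps must be increasing; positions are linearly interpolated.
    """
    t_robot = np.asarray(t_robot, dtype=float)
    t_human = np.asarray(t_human, dtype=float)
    xy_robot = np.asarray(xy_robot, dtype=float)
    xy_human = np.asarray(xy_human, dtype=float)

    start = max(t_robot[0], t_human[0])
    end = min(t_robot[-1], t_human[-1])
    if end <= start:
        raise ValueError("trajectories do not overlap in time")

    # Common clock covering only the interval where both agents were observed.
    n = max(int(round((end - start) / dt)) + 1, 2)
    t = np.linspace(start, end, n)

    rx = np.interp(t, t_robot, xy_robot[:, 0])
    ry = np.interp(t, t_robot, xy_robot[:, 1])
    hx = np.interp(t, t_human, xy_human[:, 0])
    hy = np.interp(t, t_human, xy_human[:, 1])

    dist = np.hypot(rx - hx, ry - hy)
    i = int(np.argmin(dist))
    return float(dist[i]), float(t[i])

# Hypothetical 6-second crossing (meters): the agents swap ends of a corridor.
stamps = [0.0, 3.0, 6.0]
human_xy = [(6.0, 0.4), (3.0, 0.4), (0.0, 0.4)]
straight_xy = [(0.0, 0.0), (3.0, 0.0), (6.0, 0.0)]   # robot holds its line
detour_xy = [(0.0, 0.0), (3.0, -1.0), (6.0, 0.0)]    # robot veers away mid-way

d_straight, t_straight = min_separation(stamps, straight_xy, stamps, human_xy)
d_detour, t_detour = min_separation(stamps, detour_xy, stamps, human_xy)
print(f"straight: {d_straight:.2f} m at t={t_straight:.2f} s")
print(f"detour:   {d_detour:.2f} m at t={t_detour:.2f} s")
```

Restricting the comparison to the overlapping time window avoids extrapolating either trajectory beyond what was actually recorded.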

We also deployed our BC agent in the wild on Speedway on the UT Austin campus:

The BC agent avoids the other pedestrians in a socially compliant manner, avoids the construction area, and even avoids vehicles.

Research Using SCAND

- Shah, D., Sridhar, A., Bhorkar, A., Hirose, N. and Levine, S., 2022. GNM: A General Navigation Model to Drive Any Robot. arXiv preprint arXiv:2210.03370.

- Li, H., Li, Z., Akmandor, N.U., Jiang, H., Wang, Y. and Padir, T., 2022. StereoVoxelNet: Real-Time Obstacle Detection Based on Occupancy Voxels from a Stereo Camera Using Deep Neural Networks. arXiv preprint arXiv:2209.08459.

- Yildirim, Y. and Ugur, E., 2022. Learning Social Navigation from Demonstrations with Conditional Neural Processes. arXiv preprint arXiv:2210.03582.

- de Heuvel, J., Corral, N., Kreis, B. and Bennewitz, M., 2022. Learning Depth Vision-Based Personalized Robot Navigation From Dynamic Demonstrations in Virtual Reality. arXiv preprint arXiv:2210.01683.

Citing SCAND

If you find SCAND useful for your research, please cite the following paper:

title = {Socially CompliAnt Navigation Dataset (SCAND): A Large-Scale Dataset Of Demonstrations For Social Navigation},

author = {Karnan, Haresh and Nair, Anirudh and Xiao, Xuesu and Warnell, Garrett and Pirk, S{\"o}ren and Toshev, Alexander and Hart, Justin and Biswas, Joydeep and Stone, Peter},

journal={IEEE Robotics and Automation Letters},

year = {2022},

organization = {IEEE}

}

author = {Karnan, Haresh and Nair, Anirudh and Xiao, Xuesu and Warnell, Garrett and Pirk, Soeren and Toshev, Alexander and Hart, Justin and Biswas, Joydeep and Stone, Peter},

publisher = {Texas Data Repository},

title = {{Socially Compliant Navigation Dataset (SCAND)}},

year = {2022},

version = {DRAFT VERSION},

doi = {10.18738/T8/0PRYRH},

url = {https://doi.org/10.18738/T8/0PRYRH}

}

Links

- Dataset on Texas Data Repository (recommended)

- Dataset on Google Sheet (to be deprecated soon)

Contact

For questions, please contact:

Dr. Xuesu Xiao

Department of Computer Science

George Mason University

4400 University Drive MSN 4A5, Fairfax, VA 22030 USA

xiao@gmu.edu