Subsection 12.2.1 A simple model of the computer

The following is a relevant video from our course "LAFF-On Programming for High Performance" (Unit 2.3.1).

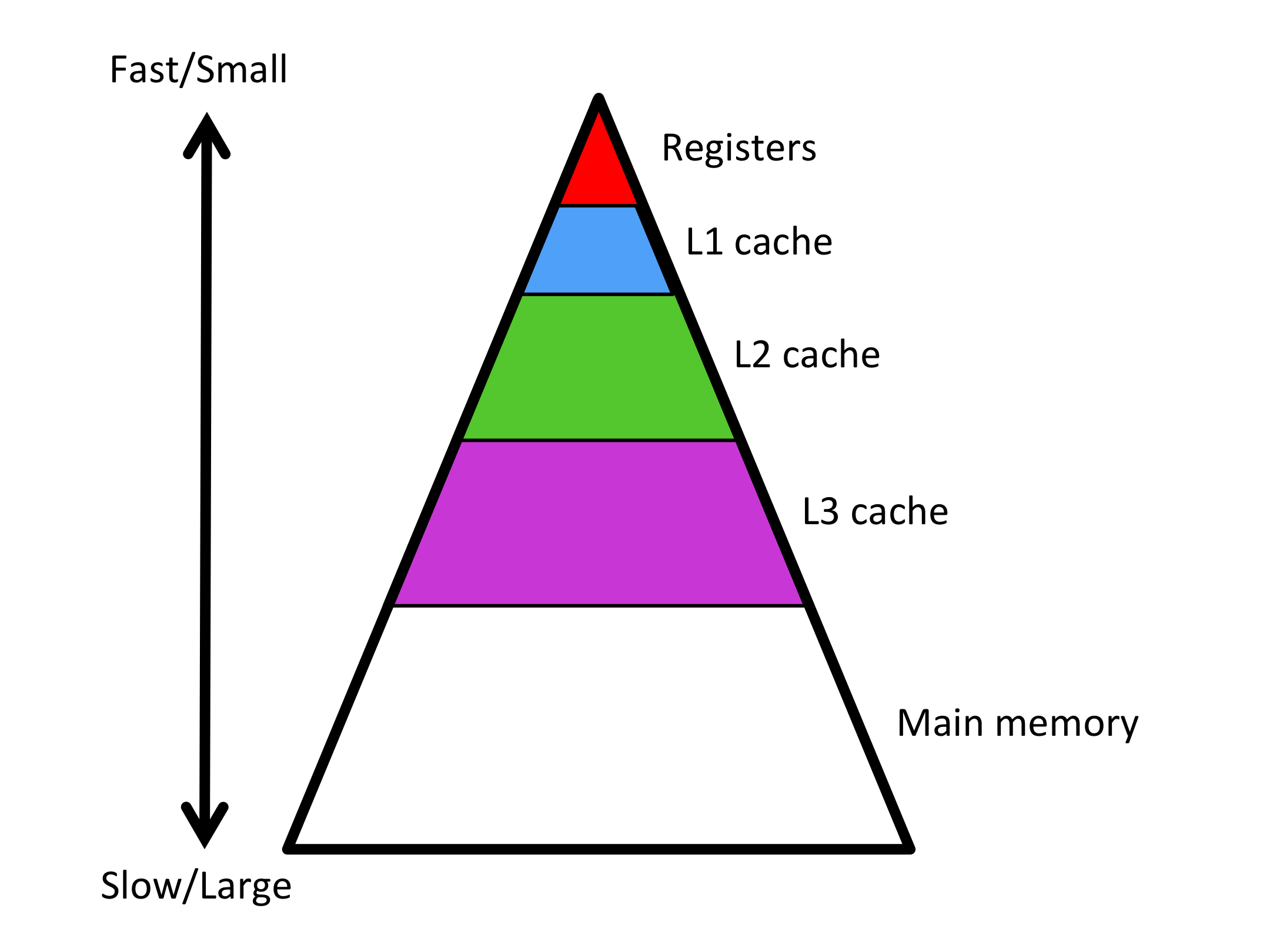

The good news about modern processors is that they can perform floating point operations at very high rates. The bad news is that "feeding the beast" is often a bottleneck: to compute with data, that data must reside in the registers of the processor, and moving data from main memory into a register takes orders of magnitude more time than performing a floating point computation with that data once it is there.
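To make the cost imbalance concrete, here is a minimal sketch of a cost model in which each memory operation costs `t_mem` and each flop costs `t_flop`, with no overlap between the two. The function names and the ratio `t_mem = 100 * t_flop` are hypothetical placeholders chosen for illustration, not measured values for any processor. The matrix-matrix multiplication count is optimistic: it assumes all three matrices fit in fast memory, so each element is read or written only once.

```python
# Hypothetical cost model: time = memops * t_mem + flops * t_flop.
# The per-operation times used below are illustrative assumptions only.


def estimated_time(flops: int, memops: int, t_flop: float, t_mem: float) -> float:
    """Estimated time when memory operations and flops do not overlap."""
    return memops * t_mem + flops * t_flop


def dot_cost(n: int) -> tuple[int, int]:
    """Dot product of two n-vectors: 2n flops, 2n words read from memory."""
    return 2 * n, 2 * n


def gemm_cost(n: int) -> tuple[int, int]:
    """C := A B + C with n x n matrices: 2n^3 flops and (optimistically)
    4n^2 memops: read A, B, and C once, write C once."""
    return 2 * n**3, 4 * n**2


if __name__ == "__main__":
    T_FLOP = 1.0   # assumption: one time unit per flop
    T_MEM = 100.0  # assumption: one memory op costs 100 flops' worth of time
    for name, cost in [("dot", dot_cost), ("gemm", gemm_cost)]:
        flops, memops = cost(1000)
        total = estimated_time(flops, memops, T_FLOP, T_MEM)
        fraction = flops * T_FLOP / total
        print(f"{name}: fraction of time spent computing = {fraction:.3f}")
```

Under these assumed parameters, the dot product spends only about 1% of its time computing, because every flop needs fresh data from memory, while the matrix-matrix multiplication spends most of its time computing, because it performs $ O(n^3) $ flops on only $ O(n^2) $ data.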

To achieve high performance for the kinds of operations that we have encountered, one needs at least a high-level understanding of the memory hierarchy of a modern processor. Modern architectures incorporate multiple (compute) cores in a single processor; in our discussion, we blur this distinction and talk about the processor as if it had only one core.

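It is useful to view the memory hierarchy of a processor as a pyramid: the registers, which are small and fast, sit at the top; main memory, which is large and slow, sits at the bottom; and progressively larger and slower cache memories (L1, L2, L3) sit in between.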
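One way to read the pyramid is to ask which level is the fastest one that can hold all the data a computation touches (its working set). The sketch below encodes a hypothetical pyramid; the level capacities are illustrative assumptions, not the specifications of any real processor, and the model ignores details such as cache lines and associativity.

```python
# Hypothetical memory pyramid, ordered from top (fastest, smallest) to bottom.
# All capacities are illustrative assumptions; real values vary by processor.
KIB = 1024
MIB = 1024 * KIB

PYRAMID = [
    ("registers", 256),  # assumption: 256 bytes of register storage
    ("L1 cache", 32 * KIB),
    ("L2 cache", 1 * MIB),
    ("L3 cache", 32 * MIB),
    ("main memory", None),  # None: treated as unbounded in this sketch
]


def fastest_level_for(working_set_bytes: int) -> str:
    """Return the highest (fastest) level of the hypothetical pyramid that
    can hold the whole working set."""
    for name, capacity in PYRAMID:
        if capacity is None or working_set_bytes <= capacity:
            return name
    raise AssertionError("unreachable: main memory is unbounded")


if __name__ == "__main__":
    # Working set of three n x n matrices of 8-byte doubles.
    for n in (4, 32, 256, 2048):
        ws = 3 * n * n * 8
        print(f"n = {n:5d}: {ws:10d} bytes -> {fastest_level_for(ws)}")
```

As `n` grows, the working set drops down the pyramid toward main memory, which is why high-performance implementations organize computation so that the data being worked on fits in the faster levels.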

Ponder This 12.2.1.1.

For the processor in your computer, research the number of registers it has, and the sizes of the various caches.
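On Linux, the kernel exposes cache details under `/sys/devices/system/cpu/cpu0/cache/index*/`, with files such as `level`, `type`, and `size`. The sketch below, with a hypothetical helper `cache_info`, reads those files and returns an empty list on systems without that directory (for example, non-Linux machines). It does not report the number of registers; that is determined by the instruction set architecture and is best looked up in the processor vendor's documentation.

```python
from pathlib import Path

# Linux sysfs location of per-CPU cache descriptions (Linux-only assumption).
CACHE_ROOT = Path("/sys/devices/system/cpu/cpu0/cache")


def cache_info(root: Path = CACHE_ROOT) -> list[dict[str, str]]:
    """Read the level, type, and size of each cache listed under `root`.
    Returns an empty list if `root` does not exist."""
    caches: list[dict[str, str]] = []
    if not root.is_dir():
        return caches
    for index in sorted(root.glob("index*")):
        entry: dict[str, str] = {}
        for field in ("level", "type", "size"):
            path = index / field
            if path.is_file():
                entry[field] = path.read_text().strip()
        if entry:
            caches.append(entry)
    return caches


if __name__ == "__main__":
    info = cache_info()
    if not info:
        print("No cache information found under", CACHE_ROOT)
    for c in info:
        print(f"L{c.get('level', '?')} {c.get('type', '?')}: {c.get('size', '?')}")
```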