
Atari Video Game Learning

Project Notebook

Goals

- Create an agent general enough to play various Atari 2600 console games without game-specific parameter re-tuning or specialized features.

- The agent should ideally be able to learn from standard low-level state representations such as raw screen pixels or console memory.

Methodology

We aim to develop model-based reinforcement learning methods that we expect to perform well on a variety of games when combined with known planning algorithms such as UCT.

Challenges

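- Non-Markovian states: In many Atari games, the game screen is not a Markov state. A single frame does not capture everything needed to predict the next one; for example, it does not show which direction a moving object is traveling.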

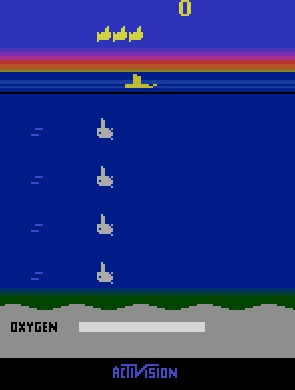

- Pixel dependencies: Most model learning algorithms assume that each pixel in the current state depends only on pixels in the previous state. This assumption breaks down in Atari games. For example, in Seaquest, fish and submarines spawn at random from the left and right edges of the screen. The model learning algorithm cannot predict such spawns (since they are random), so it can only learn that each pixel in a block on the left or right edge independently has some probability of turning gray, indicating that a submarine has spawned. The problem is that we want either all of the pixels in that area to turn gray or none of them. This requires each pixel to be conditioned on its neighbors in the current screen, not just on the previous screen.
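To make the Seaquest argument concrete, here is a toy Python sketch (not the project's model) comparing two ways of sampling a 16-pixel spawn region. In both, each pixel has the same marginal probability p of turning gray. The first samples every pixel independently, as a model conditioned only on the previous screen would. The second is a hypothetical simplification of neighbor conditioning: it samples the first pixel, then makes each later pixel copy its already-sampled left neighbor in the current screen. The block size, p = 0.1, and all function names are illustrative assumptions.

```python
import random


def sample_independent(block_size, p, rng):
    """Each pixel turns gray independently with probability p.

    This mirrors a model that conditions every pixel only on the previous
    screen, which carries no information about a random spawn.
    """
    return [rng.random() < p for _ in range(block_size)]


def sample_neighbor_conditioned(block_size, p, rng):
    """Hypothetical simplification of conditioning on current-screen neighbors.

    The first pixel is drawn with probability p; every later pixel is
    conditioned on its already-sampled left neighbor (here it simply copies it).
    """
    block = [rng.random() < p]
    for _ in range(1, block_size):
        block.append(block[-1])
    return block


def coherent_fraction(sampler, block_size, p, trials, seed=0):
    """Fraction of sampled blocks that are entirely gray or entirely background."""
    rng = random.Random(seed)
    coherent = 0
    for _ in range(trials):
        block = sampler(block_size, p, rng)
        if all(block) or not any(block):
            coherent += 1
    return coherent / trials


def coherent_prob_independent(block_size, p):
    """Closed form for the independent model: p^k + (1 - p)^k."""
    return p ** block_size + (1 - p) ** block_size


if __name__ == "__main__":
    k, p = 16, 0.1  # illustrative block size and spawn probability
    print("independent (simulated):", coherent_fraction(sample_independent, k, p, 20000))
    print("independent (exact):    ", coherent_prob_independent(k, p))
    print("neighbor-conditioned:   ", coherent_fraction(sample_neighbor_conditioned, k, p, 20000))
```

Under independent sampling a block comes out all-or-none only with probability p^k + (1 - p)^k, which is about 0.185 for k = 16 and p = 0.1, so most predicted frames contain a partially gray, physically impossible submarine. The neighbor-conditioned sampler is all-or-none on every draw while keeping the same per-pixel probability of turning gray.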