Section 5.2 Talks to be scheduled

-

Devin Matthews

Southern Methodist University

Title: Exploring What is Possible with BLIS

Abstract:

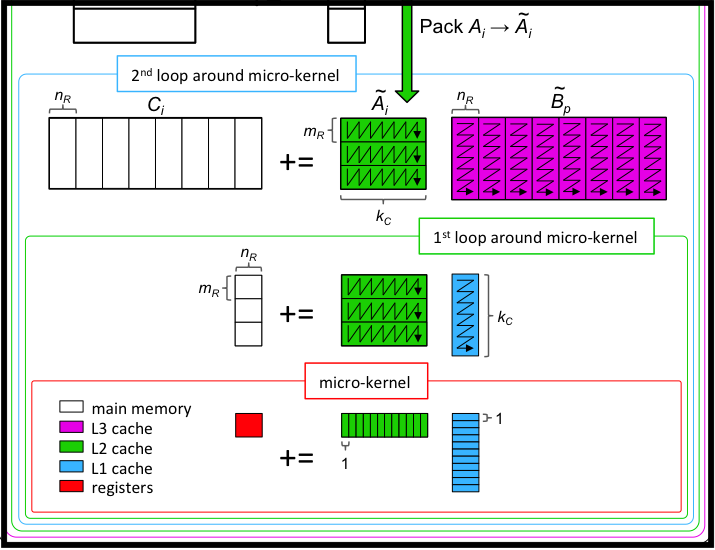

BLIS is much more than just a BLAS implementation. Numerous intellectual and technical innovations within the BLIS framework make it possible to instantiate a far wider range of operations, and to (re-)combine algorithmic pieces in myriad ways without a combinatorial explosion of complexity or effort. In this talk, I discuss the nuts and bolts of how BLIS does and will continue to enable such a diverse repertoire of functionality, as well as some ideas for and potential issues in further development.

-

RuQing Xu

The University of Tokyo

Title: GEMMFIP: Unifying GEMM in BLIS

Related papers:

Towards a Unified Implementation of GEMM in BLIS [1], presented at ICS 2023.

arXiv: GEMMFIP: Unifying GEMM in BLIS [2].

-

Joe Dobson

Arm

Title: Exploring What is Possible with BLIS

Abstract:

Field Van Zee

The University of Texas at Austin

Title: Ask me anything

-

Devangi Parikh and Greg Henry

The University of Texas at Austin and Intel

Tentative: Updates on casting higher precision in lower precision

Related paper: Cascading GEMM: High Precision from Low Precision [3]

-

Vijay Thakkar

NVIDIA and GATech

Title (tentative): A Generalized Micro-kernel Abstraction for GPU Linear Algebra

Related software:

https://github.com/nvidia/cutlass. Collaborative work with Cris Cecka.

Marat Dukhan

Google

Title: BLIS for the Web: 2023 edition

Rodrigo Brandao

UT Austin

Topic: Updates on Practical Strassen's Algorithms

Johannes Dieterich

AMD (Austin)

Title:

Harihara Sudhan

AMD (India)

L1 and L2 API Optimizations

Eleni Vlachopoulou

AMD (India)

Performance improvements of NRM2

Meghana Vankadari

AMD (India)

AVX-512 optimizations for BLIS Level-3 routines

Edward Smyth

AMD (India)

AOCL BLIS framework changes

Mithun Mohan

AMD (India)

Low Precision GEMM

-

Upasana Sridhar

Carnegie Mellon University

An introduction to the SMaLL Framework for ML libraries

Abstract: We describe the SMaLL framework, a framework for rapidly developing high-performance ML libraries for CPU-based platforms. We adopt an approach similar to that of BLIS by restricting the design effort to a small set of kernels via standard loop nest bodies. This allows us to target new hardware rapidly and avoids the overheads associated with translating ML primitives to linear algebra.

Robert van de Geijn

The University of Texas at Austin

Title: Applying FLAME to the \(LTL^T\) factorization with pivoting

Abstract: Given a skew-symmetric matrix \(X\), the computation of its \(LTL^T\) factorization with pivoting is an operation of interest to quantum physicists. This operation is of interest to the BLIS community because it illustrates the benefits of a more flexible BLAS layer like BLIS. Known and new algorithms require operations like skew-symmetric rank-2 and rank-2k updates, and GEMMT updating only the strictly lower triangular part of the result matrix. Of interest to the FLAME community are a number of surprising new algorithms.

Collaborative work with Maggie Myers, Devin Matthews, RuQing Xu, Ishna Satyarth, Chao Yin, and others

A draft of this paper is available upon request.
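For readers unfamiliar with the skew-symmetric rank-2 update named in the abstract above, the following is a minimal illustrative sketch in plain Python. It assumes the usual definition \(A := A + \alpha (x y^T - y x^T)\) and stores only the strictly lower triangular part, since the diagonal of a skew-symmetric matrix is zero and the upper part is the negation of the lower part. The function names `skew_rank2_update_lower` and `expand_skew` are hypothetical and are not part of the BLIS API or of the talk.

```python
def skew_rank2_update_lower(A, x, y, alpha=1.0):
    """Hypothetical helper (not a BLIS routine).

    Updates, in place, only the strictly lower triangle of A with
    A := A + alpha * (x y^T - y x^T).
    """
    n = len(A)
    for i in range(1, n):
        for j in range(i):  # j < i: strictly lower triangle
            A[i][j] += alpha * (x[i] * y[j] - y[i] * x[j])
    return A


def expand_skew(A):
    """Hypothetical helper: build the full skew-symmetric matrix
    implied by the strictly lower triangle of A."""
    n = len(A)
    F = [[0.0] * n for _ in range(n)]
    for i in range(1, n):
        for j in range(i):
            F[i][j] = A[i][j]
            F[j][i] = -A[i][j]  # upper part is the negated lower part
    return F


A = [[0.0] * 3 for _ in range(3)]
skew_rank2_update_lower(A, [1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
full = expand_skew(A)
```

Touching only the strictly lower triangle does roughly half the work of a general rank-2 update and keeps the result exactly skew-symmetric by construction.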

[1] https://dl.acm.org/doi/abs/10.1145/3577193.3593707

[2] https://arxiv.org/abs/2302.08417

[3] https://arxiv.org/abs/2303.04353