Section 5.3 Friday Sept. 23

Subsection 5.3.1 Friday 8:30 - 9:00: Coffee and muffins

Subsection 5.3.2 Friday 9:00 - 10:30 Session 5

Subsubsection 5.3.2.1 GEMM SUP Optimizations

Mithun Mohan, co-author Bhaskar NallaniAMD (India)

Abstract

Optimizations targeting multi-threaded GEMM SUP path, with emphasis on removing scaling bottlenecks.

Subsubsection 5.3.2.2 Efficient TRSM Implementation for Small Dimensions

Bhaskar Nallani, co-author Satish NugguAMD (India)

Abstract

This approach gave the best TRSM performance for small sizes by reducing packing overhead, efficiently using prefetching and diagonal element packing.

Subsubsection 5.3.2.3 Automatic Generation of a Family of Matrix Multiplication Routines with Apache TVM

Adrián CastellóUniversitat Politecnica de Valencia

Abstract:

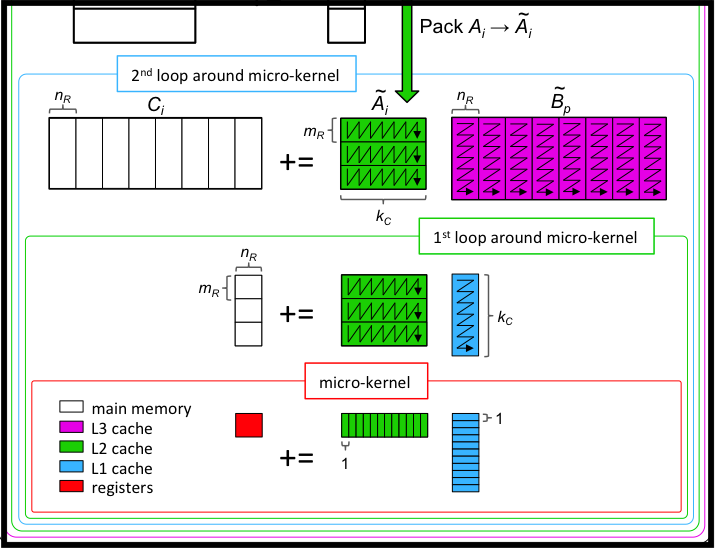

We explore the utilization of the Apache TVM open source framework to automatically generate a family of algorithms that mimic the approach taken by popular linear algebra libraries, such as GotoBLAS2, BLIS and OpenBLAS, in order to obtain a blocked formulation of the general matrix multiplication (gemm). In addition, we fully automatize the generation process, by also leveraging the Apache TVM framework to derive an ample variety of the internal micro-kernels for gemm. This is in contrast with the convention in high performance libraries, which hand-encode a single micro-kernel per architecture, in general using assembly. In global, the combination of our TVM-generated blocked algorithms and micro-kernels for gemm 1) im- proves portability, maintainability and, globally, the software life cycle; 2) provides high flexibility to be easily tailored and optimized to different data types, processor architectures, and gemm shapes; and 3) features a minimal memory footprint. The approach thus provides a solution that, for example, is especially appealing for deep learning tasks in edge devices.

Subsection 5.3.3 Friday 10:30-10:45 Break

Subsection 5.3.4 Friday 10:45 - 12:15 Session 6

Subsubsection 5.3.4.1 Programming Parallel Dense Matrix Factorizations (and Inversion) for New-Generation NUMA Architectures

Sandra CatalánUniversidad Complutense de Madrid

Abstract:

We address the programmability complexity derived from the emergence of new-generation shared-memory NUMA architectures employing dense matrix factorizations and matrix inversion (DMFI) as a use case.

Our methodology pursues performance portability across different NUMA configurations by proposing multi-domain implementations for DMFI plus a hybrid task- and loop-level parallelization that configures multi-threaded executions to fix core-to-data binding, exploiting locality while maintaining the ease of development usually associated with shared-memory programming. In addition, we introduce a generalization of the multi-domain implementations for DMFI that offers support for virtually any NUMA topology in present and future architectures.

Our experimentations of the DMFI routines on two modern architectures (AMD Rome and Huawei Kunpeng 920), with configurable NUMA topologies, reports performance across architectures and inter- and intra-socket NUMA configurations competitive with state-of-the-art message-passing implementations.

Subsubsection 5.3.4.2 Portable code generation and semi-automatic scheduling for BLIS microkernels with Exo

Julian Bellavita and Grace DinhUC Berkeley

Subsubsection 5.3.4.3 Optimizing BLIS for a NUMA architecture: A glimpse of the coming NUMApocalypse

Leick RobinsonOracle

Abstract

As Moore’s Law approaches its useful limit, chip manufacturers are increasingly turning to higher core counts to achieve greater processing power. This, along with increasing demands for more memory, leads to a natural intermingling of cores and memory in ever more skewed NUMA architectures.

This presentation takes a rapid-fire, visually rich journey through our explorations at Oracle into bringing out the best performance from BLIS on one such architecture, the 160-core Ampere Altra, as well as how this can guide and inform the future of BLIS in an increasingly NUMA landscape.

Subsection 5.3.5 12:15 - ???? Wrap Up

slides/BLIS_Retreat_AMD_GEMM_SUP_Optimizations_MithunAndBhasker.pdfslides/BLIS_Retreat_AMD_TRSM_Small_BhaskerAndSatish.pdfslides/Adrian_slides.pdfslides/Julian_slides.pdfslides/BLISandtheNUMApocalypse_static.pdf