Section 5.2 Thursday Sept. 22

Subsection 5.2.1 Thursday 8:30 - 8:55: Coffee and muffins

Subsection 5.2.2 Thursday 8:55 - 9:00 Welcome by Robert van de Geijn

Subsection 5.2.3 Thursday 9:00 - 11:00 Session 1

Subsubsection 5.2.3.1 Exploring what is possible with BLIS

Devin Matthews, Southern Methodist University

Abstract:

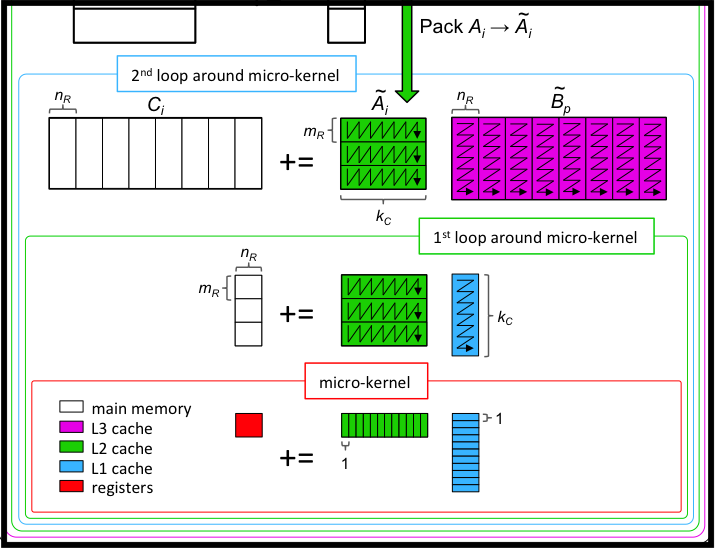

BLIS is much more than just a BLAS implementation. Numerous intellectual and technical innovations within the BLIS framework make it possible to instantiate a far wider range of operations, and to (re-)combine algorithmic pieces in myriad ways without a combinatorial explosion of complexity or effort. In this talk, I discuss the nuts and bolts of how BLIS does and will continue to enable such a diverse repertoire of functionality, as well as some ideas for and potential issues in further development.

Subsubsection 5.2.3.2 AMD’s Contribution to BLIS

Meghana Vankadari, co-author Kiran Varaganti, AMD (India)

An overview of the features, new APIs, and optimizations that AMD has added to BLIS.

Subsubsection 5.2.3.3 User Mode Profiler for BLIS (DTL)

Dipal M Zambare, AMD (India)

Abstract:

A new Debug and Trace feature has been added to AOCL-BLIS for application profiling and debugging. This presentation explains its features and how to use them.

Subsubsection 5.2.3.4 Multithreading SGEMV

Harihara Sudhan, co-author Bhaskar Nallani, AMD (India)

Abstract:

Parallelizing Level 2 routines efficiently by predicting the optimal number of threads required based on the input dimensions.

Subsection 5.2.4 Thursday 11:00 - 11:15 Break

Subsection 5.2.5 Thursday 11:15 - 12:45 Session 2

Subsubsection 5.2.5.1 Ask me anything

Field Van Zee, The University of Texas at Austin

We can share a link to the video upon request (send mail to rvdg@cs.utexas.edu).

Subsubsection 5.2.5.2 Towards BLAS 3 robust solvers in LAPACK

Angelika Schwarz, Intel

Abstract:

The computation of eigenvectors and condition number estimation require the solution of triangular systems, which are known to be prone to floating-point overflow. To avoid overflow, LAPACK contains a set of robust BLAS-2-based solvers. These solvers use dynamic scaling to avoid introducing Infinity into the solution. Recently, BLAS-3 versions of these solvers have been devised. This presentation gives an overview of the progress in integrating the BLAS-3 solvers into LAPACK.

Subsubsection 5.2.5.3 Libflame - no more "0 users, 0 complaints"

Robert van de Geijn, The University of Texas at Austin

Abstract:

There has been a renewed interest in a previous project of ours, which resulted in another software artifact: the libflame library. We briefly review the foundational research behind the effort, how it relates to BLIS, and the state of the library.

This talk will be short and is meant to instigate a discussion of what efforts are being undertaken outside our core team and how this can be coordinated.

Subsection 5.2.6 Thursday 12:45 - 1:45 Lunch

Subsection 5.2.7 Thursday 1:45 - 3:15 Session 3

Subsubsection 5.2.7.1 Accelerators in FLAMEs

Johannes Dieterich, AMD (Austin)

Subsubsection 5.2.7.2 The new performance landscape of finite element methods for fluids and structures

Jed Brown, University of Colorado at Boulder

Subsubsection 5.2.7.3 Tensor-Times-Vector: a Use-Case for "Loop-over-BLIS"

Cem Bassoy, Technical University of Hamburg

Subsection 5.2.8 Thursday 3:15 - 3:30 Break

Subsection 5.2.9 Thursday 3:30 - 5:00 Session 4

Subsubsection 5.2.9.1 A case for BLIS for X

Tze Meng Low, Carnegie Mellon University

Subsubsection 5.2.9.2 Cascading GEMM

Devangi Parikh and Greg Henry, The University of Texas at Austin

Abstract:

In this talk, we will discuss the opportunities for implementing higher-precision matrix-matrix multiplication (GEMM) using lower-precision high-performance GEMM. We illustrate these ideas using double-double precision (FP64x2) GEMM as an example. We leverage the BLIS framework to approximate FP64x2 GEMM accuracy, which can be cast in terms of ten FP64 GEMMs by cascading the input matrices into four FP64 matrices. We show results that represent significant improvements over previous years, for both performance and accuracy, on both well-conditioned and ill-conditioned matrices.

Subsubsection 5.2.9.3 Exception handling for the BLAS and LAPACK

Weslley da Silva Pereira (collaborative work with Julien Langou and Jim Demmel)

University of Colorado Denver

Abstract:

Numerical exceptions, which may be caused by overflow, operations like division by 0 or sqrt(-1), or convergence failures, are unavoidable in many cases, in particular when software is used on unforeseen and difficult inputs. As more aspects of society become automated, e.g., self-driving cars, health monitors, and cyber-physical systems more generally, it is becoming increasingly important to design software that is resilient to exceptions, and that responds to them in a consistent way. Consistency is needed to allow users to build higher-level software that is also resilient and consistent (and so on recursively). In this talk, we explore the design space of consistent exception handling for the widely used BLAS and LAPACK linear algebra libraries, pointing out a variety of instances of inconsistent exception handling in the current versions, and propose a new design that balances consistency, complexity, ease of use, and performance. Some compromises are needed, because there are preexisting inconsistencies that are outside our control, including in or between existing vendor BLAS implementations, different programming languages, and even compilers for the same programming language. And user requests from our surveys are quite diverse. We also propose our design as a possible model for other numerical software, and welcome comments on our design choices.

slides/BLIS_Retreat_AMD_Contribution_To_BLIS_MeghanaAndKiran.pdf

slides/BLIS_Retreat_AMD_AOCL_DTL_Dipal.pdf

slides/BLIS_Retreat_AMD_Multithreading_SGEMV_HariAndBhasker.pdf

slides/Angelika_slides.pdf

slides/Robert_slides.pdf

slides/blis_retreat_Johannes.pdf

https://arxiv.org/abs/2204.01722

slides/Cem_slides.pdf

slides/Devangi_slides.pdf

slides/Weslley_slides.pdf