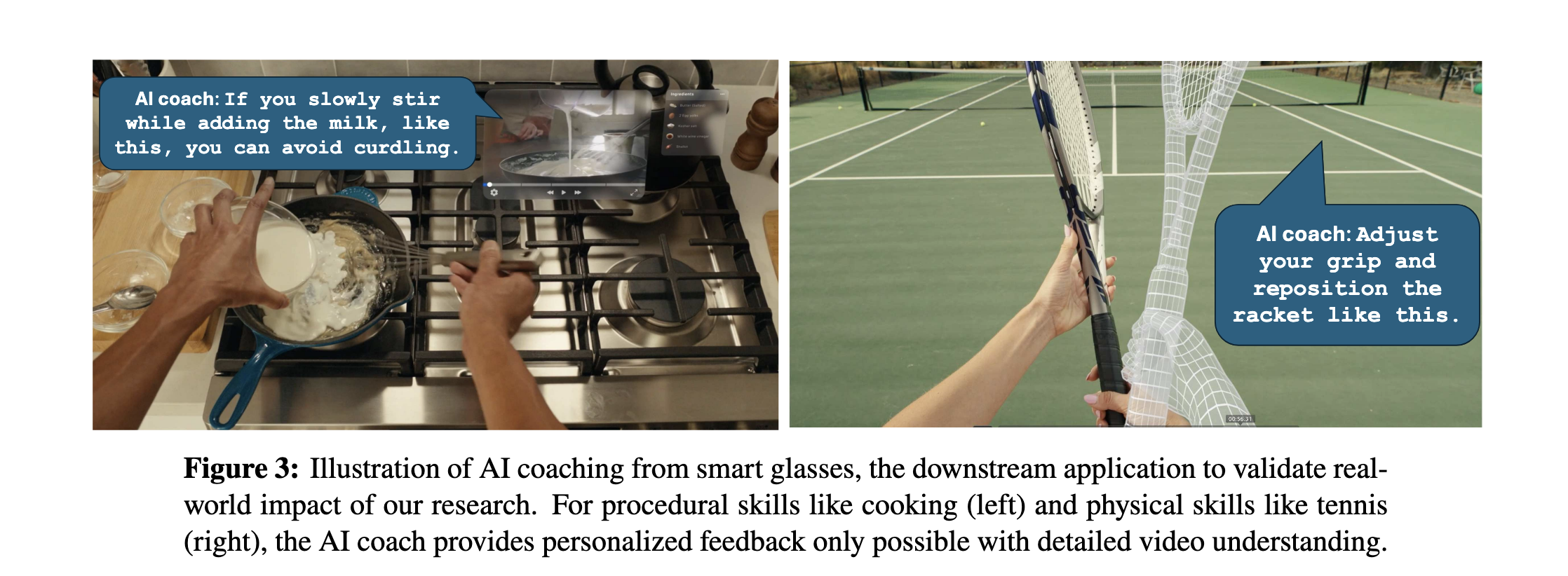

Imagine having a pair of smart glasses that don’t just record what you see, but truly understand it. Maybe you’re fixing a leaky faucet, improving your tennis swing, or rehabbing a shoulder injury, and there’s an AI expert guiding you with real-time, personalized feedback. This is the vision of researcher Kristen Grauman, a professor in the Department of Computer Science at The University of Texas at Austin. She was recently awarded the prestigious Hill Prize in Artificial Intelligence for a groundbreaking research project that aims to transform AI from a passive observer into an active, skill-building coach.

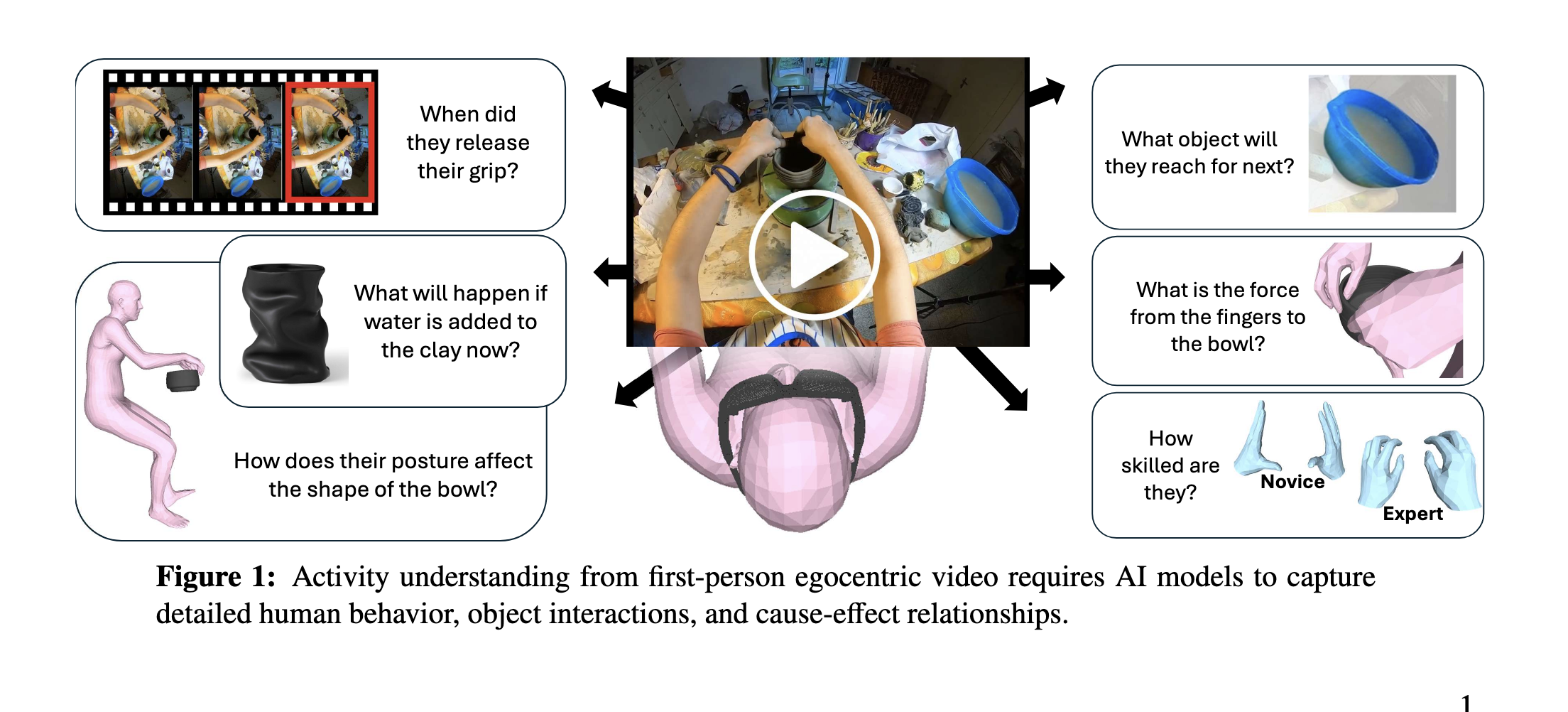

Professor Grauman’s research in computer vision and machine learning focuses on video, visual recognition, and action for perception or embodied AI. The Hill Prizes are awarded to researchers accelerating high-risk and high-impact science and innovation. Dr. Grauman’s research addresses the complex challenge of teaching AI to interpret human activity from first‑person video—a key step toward creating intelligent systems that can actively support people in everyday life.

Artificial intelligence has transformed our world through its ability to process language and recognize static objects. But as Grauman points out, words have their limits, and true understanding of human activity goes well beyond verbal description and linguistic reasoning. Teaching someone to tie their shoes or anticipate a tennis serve through text alone is nearly impossible; it requires an understanding of movement, timing, and three-dimensional space. Video offers an irreplaceable channel for real-world dynamics, subtle cause and effect, physical laws, behaviors and intentions. Yet today’s computer vision models struggle to deeply understand video.

At the core of this new research is "egocentric" vision. While most AI is trained on "disembodied" internet footage (third-person videos taken from a distance), Grauman’s team will focus on first-person video captured from wearable devices such as smart glasses. If an AI agent sees what you see, it can understand how you manipulate objects and interact with your environment in real time.

The technical hurdles of this research project are significant. Current AI models often struggle to tell whether a given video is playing forwards or backwards, and they tend to “hallucinate” explanations based on what they expect to see rather than what is actually happening. To solve this, Grauman and her research team are building 4D systems, grounded in time and space, that can reason over time, map 3D environments and track the precise, millimetric movements of human hands.

The project brings together a multidisciplinary research team that includes 4D human body understanding expert Georgios Pavlakos (UT Austin) and human-computer interaction specialist Amy Pavel (UC Berkeley) to move beyond language-centric AI and create machines that truly understand the physical world. Collaborating with Travis Vlantes (UT Applied Sports Science) and Prof. Hao-Yuan Hsiao (UT Kinesiology and Health Education), the team aims to enhance elite athlete training and develop wearable feedback for rehabilitation exercises.

Ultimately, AI coaches could have untold real-world impact. This game-changing technology could give every individual access to a personalized coach that helps them improve their cooking, playing, shooting, serving, you name it. At-home adherence to physical therapy exercises could increase significantly, improving rehab and prolonging quality of life; people with developmental disabilities could have augmented vocational training and autonomy in a broader set of jobs; and the mental load of performing everyday tasks could be lightened and perhaps met with an encouraging “Finish strong!” for extra support.