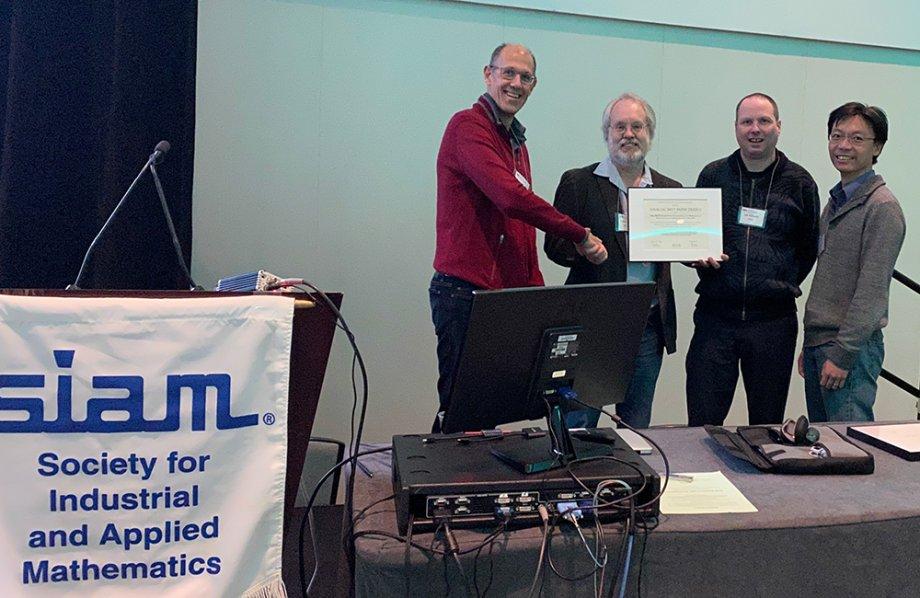

Robert van de Geijn, Lee Killough, and Tze Meng Low accepting the award at PP20 in Seattle, Washington.

The paper titled “The BLIS Framework: Experiments in Portability” recently received the 2020 SIAM Activity Group on Supercomputing Best Paper Prize. Among the authors of this paper are TXCS professor Dr. Robert van de Geijn, as well as TXCS graduates Field Van Zee, Tyler Smith, Bryan Marker, Tze Meng Low, John Gunnels, and Mike Kistler.

The prize is awarded every two years to the author(s) of the most outstanding paper in the field of parallel scientific and engineering computing published within the four calendar years preceding the award year, as determined by the selection committee.

The paper in question discusses early results for the BLAS-like Library Instantiation Software (BLIS) framework, which is a dense linear algebra (DLA) toolbox. According to Dr. van de Geijn, DLA functions reside “at the bottom of the food chain” for many applications in computational science, biology, physics, engineering, and various other related fields. These applications rely on common functions that express computation in terms of matrices and vectors. The most computationally intensive of these dense linear algebra functions is matrix-matrix multiplication.

The importance of optimizing these calculations was recognized in the 1970s, prompting key community members to propose the Basic Linear Algebra Subprograms (BLAS). By providing a common interface, the BLAS made portable, high-performance matrix computation more widely accessible. Several notable implementations of the BLAS have been developed over the decades; a significant one is GotoBLAS, written by Kazushige Goto at the Texas Advanced Computing Center. Goto structured his implementations of matrix-matrix operations around carefully optimized “kernels” written in assembly language. Because the matrix multiplication algorithm invokes this kernel within multiple nested loops, even small inefficiencies within the kernel are magnified into a noticeable drop in performance. These kernels must therefore be highly streamlined.

The BLIS team understood what GotoBLAS did well and where it fell short. While the algorithm employed by Goto was sound, the library used a “large kernel” design that expressed the algorithm's three innermost loops in low-level assembly code. This was problematic for several reasons. First, the monolithic kernels meant that multiple similar yet distinct kernels were needed to support other common variations of matrix multiplication. Second, the size and complexity of each kernel demanded significant time, effort, and expertise to develop and maintain. Third, the two innermost loops could not be parallelized by higher-level code, which limited the opportunities for obtaining scalable performance on multithreaded architectures.

When BLIS architect Field Van Zee began the development effort in 2012, he realized that much of GotoBLAS could be refactored and simplified. By pushing the boundary between the higher-level C code and the low-level assembly code further down, he was able to confine the assembly region of the algorithm to the single innermost loop, which the team calls the “microkernel.” This function is quite small, making it much easier to write and maintain. “It’s easy enough to explain in an undergraduate classroom setting,” says Van Zee. Designing BLIS around a microkernel also meant that the differences among the various matrix kernels in GotoBLAS fell away. With fewer, smaller kernels and more of the algorithm expressed in a portable language such as C, the developers were able to port BLIS to existing and new microarchitectures with relative ease.
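To make this loop structure more concrete, here is a minimal C sketch of a Goto-style matrix multiplication (C += A·B, column-major storage) organized around a BLIS-style microkernel. It is an illustration, not BLIS code: the block sizes `MR`, `NR`, `KC`, `MC`, `NC`, the function names `gemm_sketch`, `pack_A`, `pack_B`, and `microkernel`, and the plain-C microkernel body are all hypothetical choices made for readability. A real BLIS microkernel is tuned per microarchitecture, typically in assembly or vector intrinsics. In the “large kernel” arrangement described earlier, the loops labeled “2nd” and “1st” below, together with the microkernel's own loop, would have formed one assembly routine; here only `microkernel()` would need low-level tuning.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical block sizes; a real library picks these per microarchitecture. */
enum { MR = 4, NR = 4, KC = 64, MC = 32, NC = 64 };

/* Column-major element access with leading dimension ld. */
#define ELEM(X, ld, i, j) ((X)[(size_t)(j) * (ld) + (i)])

static int min_int(int a, int b) { return a < b ? a : b; }

/* Microkernel: ab (MR x NR) = Ap (MR x kc, packed) * Bp (kc x NR, packed).
 * This is the only routine that would be hand-tuned in a BLIS-style design. */
static void microkernel(int kc, const double *Ap, const double *Bp, double *ab)
{
    memset(ab, 0, sizeof(double) * MR * NR);
    for (int p = 0; p < kc; ++p)            /* single innermost loop: rank-1 updates */
        for (int j = 0; j < NR; ++j)
            for (int i = 0; i < MR; ++i)
                ab[j * MR + i] += Ap[p * MR + i] * Bp[p * NR + j];
}

/* Pack an mc x kc block of A into MR-row micro-panels, zero-padding the edge. */
static void pack_A(int mc, int kc, const double *A, int lda, double *Ap)
{
    for (int ir = 0; ir < mc; ir += MR)
        for (int p = 0; p < kc; ++p)
            for (int i = 0; i < MR; ++i)
                *Ap++ = (ir + i < mc) ? ELEM(A, lda, ir + i, p) : 0.0;
}

/* Pack a kc x nc block of B into NR-column micro-panels, zero-padding the edge. */
static void pack_B(int kc, int nc, const double *B, int ldb, double *Bp)
{
    for (int jr = 0; jr < nc; jr += NR)
        for (int p = 0; p < kc; ++p)
            for (int j = 0; j < NR; ++j)
                *Bp++ = (jr + j < nc) ? ELEM(B, ldb, p, jr + j) : 0.0;
}

/* C (m x n) += A (m x k) * B (k x n), all column-major. Not thread-safe:
 * the packing buffers are static to keep the sketch short. */
void gemm_sketch(int m, int n, int k, const double *A, int lda,
                 const double *B, int ldb, double *C, int ldc)
{
    static double Ap[MC * KC];   /* MC is a multiple of MR, so this fits */
    static double Bp[KC * NC];   /* NC is a multiple of NR, so this fits */
    double ab[MR * NR];
    assert(m >= 0 && n >= 0 && k >= 0);

    for (int jc = 0; jc < n; jc += NC) {                   /* 5th loop (n) */
        int nc = min_int(NC, n - jc);
        for (int pc = 0; pc < k; pc += KC) {               /* 4th loop (k) */
            int kc = min_int(KC, k - pc);
            pack_B(kc, nc, &ELEM(B, ldb, pc, jc), ldb, Bp);
            for (int ic = 0; ic < m; ic += MC) {           /* 3rd loop (m) */
                int mc = min_int(MC, m - ic);
                pack_A(mc, kc, &ELEM(A, lda, ic, pc), lda, Ap);
                for (int jr = 0; jr < nc; jr += NR) {       /* 2nd loop */
                    int nr = min_int(NR, nc - jr);
                    for (int ir = 0; ir < mc; ir += MR) {   /* 1st loop */
                        int mr = min_int(MR, mc - ir);
                        microkernel(kc, &Ap[ir * kc], &Bp[jr * kc], ab);
                        /* Copy back only the valid mr x nr part of the tile. */
                        for (int j = 0; j < nr; ++j)
                            for (int i = 0; i < mr; ++i)
                                ELEM(C, ldc, ic + ir + i, jc + jr + j) += ab[j * MR + i];
                    }
                }
            }
        }
    }
}
```

Because the 2nd and 1st loops (`jr`, `ir`) are ordinary C in this arrangement, higher-level code is free to parallelize them; doing so would require per-thread packing buffers in place of the static ones used here.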

This new microkernel structure also paved the way for another early BLIS developer, Tyler Smith, to develop a framework for expressing multicore parallelism. BLIS's refactoring exposed two additional loops, previously locked inside GotoBLAS's large kernel, that could be parallelized. According to Van Zee, “We had extra knobs and levers that we could pull to explore how to get the best performance on these really powerful computers.” In this way, the team was able to achieve extremely high performance by exploiting the dozens (and sometimes hundreds) of cores present on modern processors.

The paper that won the team the SIAM prize demonstrates the portability benefits of BLIS on a wide range of architectures. It also shows that, thanks to BLIS's careful redesign, scalable parallelism on multicore and many-core architectures can be easily achieved. “This research delivers some of the fundamental software used for scientific discovery through computing,” says Dr. van de Geijn. “[It] enables advances that touch our everyday lives.”

When asked about the future, the team says that they’re very excited that certain Linux distributions are starting to integrate BLIS. The BLIS framework is now available in Debian Unstable, the development branch of the Debian distribution whose packages eventually feed into Ubuntu, as well as in Gentoo, a Linux distribution that builds its packages from source. But expanding their presence within Linux is just one part of the future that BLIS developers see on the horizon. Some of the graduate students on the team have achieved significant success with augmenting the BLIS approach for the machine learning space, while others have ported its fundamental ideas to GPUs. The team always has an ear to the ground with their friends in industry and academia, listening to see what they think will become important in the future. “We continue to be surprised at how many other research directions that BLIS has spawned,” says Van Zee. “We’re still chasing down those leads to this day, seven years after the framework was born.”