A team comprising Texas Computer Science (TXCS) Ph.D. student Santhosh Ramakrishnan, postdoctoral researcher Ziad Al-Halah, and TXCS Professor Kristen Grauman recently won first place in the 2020 Habitat visual navigation challenge held at the Conference on Computer Vision and Pattern Recognition (CVPR).

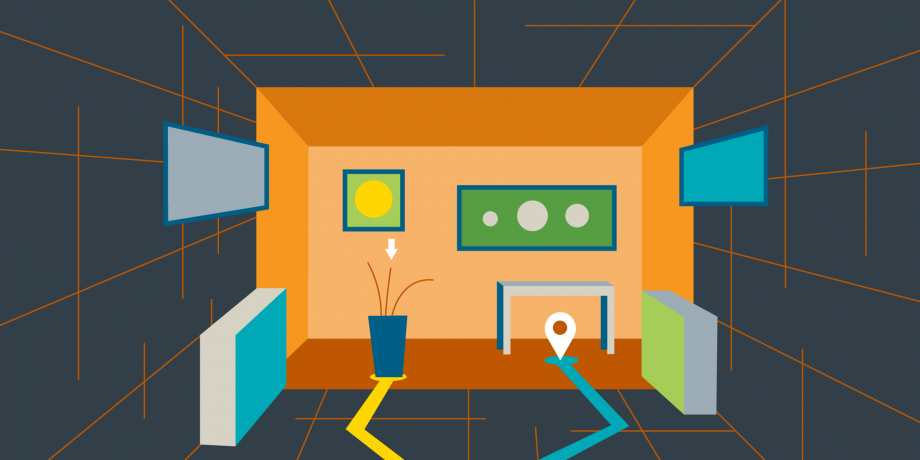

The event consisted of two challenges: ObjectNav, which required an agent to find a specific object in an unknown environment (such as locating a fireplace in a room), and PointNav, which required an agent to navigate to a target coordinate in an unknown environment. ObjectNav focused on recognizing objects and commonsense reasoning about object semantics, like knowing that a fireplace is typically located in the living room. PointNav, in comparison, focused on simulation realism and sim2real predictivity: the ability to predict a model's navigational performance on a real robot by examining its performance in simulation. The TXCS team won the PointNav side of the challenge.

Several teams competed to design a learning agent that can enter an unfamiliar environment and quickly learn how to navigate the space while performing an “intelligent search to find a given location or object.” Ramakrishnan, a third-year Ph.D. student, further explained the challenge: “[The agent] doesn’t have a map of the environment, it knows nothing about it. It’s given [a coordinate] that it needs to get to very quickly, and success is measured by whether or not it reaches the point and how quickly it reaches the point.”

This challenge was serendipitous for Ramakrishnan, who has been working with Professor Grauman for three years and whose research is primarily focused on the intersection of computer vision and reinforcement learning. He worked on the paper “Occupancy Anticipation for Efficient Exploration and Navigation” with the UT Austin Computer Vision Group before entering the challenge. Unlike the typical approach in visual navigation, which builds a map by documenting what the agent can see, the paper explores a different method: predicting the things that aren’t immediately seen.

Ramakrishnan explained the research in terms of a bedroom. When entering a bedroom, an agent can easily perceive objects such as the floor or a bed. But what about objects under the bed, or behind it? “If I’m entering a bedroom and I don’t see everything behind the bed, I can still guess that there’s going to be some amount of floor space behind the bed because I would have to get on it.” This research helps autonomous agents navigate their environment more efficiently by predicting the unseen. The work was ideal for tackling the PointNav challenge. “We thought that we might try out the idea in this particular challenge because it fits well,” he said.

“It was interesting to bridge the gap between work at the research level versus work that needs to function well at the level of competition. You’re competing with people who are working on the same problem at the same time. In such an event, everyone is at their best. The most rewarding part [of entering the challenge] was pushing the boundaries of what a method can do and [getting] the best performance that they could,” Ramakrishnan said.